Brains and AI

Distributed Representations of Words and Phrases and their Compositionality

Presented by Joycelyn Yiu

What are distribution representations of words? Since symbols have no internal structure, researchers tries to represent words with vectors such that words that are similar to each other are closer in the vector space and vice versa.

The inspiration for this idea comes from 1980’s, to convey semantics of language to machines. These representations can capture patterns and relationships between words like synonyms and grammar rules. How do we capture these patterns in practice?

Skip-Gram model

The skip gram model is a method that teaches a computer to understand the meaningful of words by reading large pieces of text. It basically learns the word relationships by predicting the surrounding words for a given word.

In essence, from the training text, we compute the probability of context words occurring after a center word. How do you learn a distribution over words?

The authors of the paper replaced softmax with a simpler one called Noise Contrastive Estimation NCE) that improved training and quality of representations.

This model typically does not work well for rare words.

In addition to the vanilla model, they added extension to represent phrases. Let us delve into each of these contributions.

Method

Hierarchical softmax

The authors introduced hierarchical softmax that brought down the number of evaluations to \(\log_2(W)\) nodes rather than the typical \(W\) output nodes (where \(w\) is the number of words in the vocabulary).

Is this used in LLMs? LLMs have become ubiquitous because of their parallelizability, and this takes it away to some extent.

Negative Sampling

Introduced by Guzman and Hyvarinen, NCE tries to differentiate data from noise using logistic regression.

Subsampling of Frequent Words

Common words like “the”, “a”, etc. occur frequently in text and do not provide a lot of information as compared to rare words. To counter this imbalance, the authors introduced a frequency based discard probability for the words to subsample the words. This improves the training speed and the accuracy of rare words.

Learning phrases

The simple addition the authors did was to create new tokens for phrases like “The New York Times” - increasing the vocabulary size potentially making it unscalable. However, iteratively training the model considering longer phrases seemed to obtain a decent performance according to the authors.

These techniques can be added to any underlying neural network model.

Experiments

The authors noticed that the representations posses a linear structure that allowed vector arithmetic. This potentially is a consequence of the training objective - the word vectors are in a linear relationship with the inputs to softmax non-linearity.

Attention is all you need

Presented by Hansen Jin Lillemark

What is intelligence? We represented words with high-dimensional vectors, and we seemed to have cracked natural language? How does the brain understand and represent concepts?

Language is one of the easiest avenues to test our algorithms of artificial intelligence. We have stored a lot of data in the past few decades, and this set up a good foundation to train models here.

Transformers, inspired from some brain mechanisms, have become the go-to mechanism for most of the ML applications. It starts off with the language modeling task where the task is to predict the next word \(x_t\) give the previous words in the sentence. This very general task was first introduced by Shannon to define information and entropy.

Domains like mathematics have deterministic distributions whereas reasoning usually has a flatter distribution. Another issue with language is that it keeps changing with time, and the models need to be dynamic enough to maintain this.

Method

Continuing from the Word2Vec paper, we represent words with vector embeddings.

The embedding size is essentially like PCA - decomposing meanings into a low-dimensional space. Although there are over hundred thousand words, we are representing them in 500 dimensions?

Can we create different similarity kernels to create different meanings? This effect is achieved through multi-head attention.

Different language to language translation is possible with LLMs. Is there an inherent universal language?

LLMs are able to reason well in some scenarios. Try asking it a novel puzzle and see how it does. However, it struggles with math. Is there something we are missing?

Decoder inference is still sequential… Training seems more efficient but inference…

Bias-Variance Tradeoff

This topic seems like a thing of the past. We have built very large models around large amounts of data, and everything seems to work. So, why worry about bias or variance? Bias is solved by large models and variance is solved by large data. If all’s good in the world, then why don’t we have zero loss?

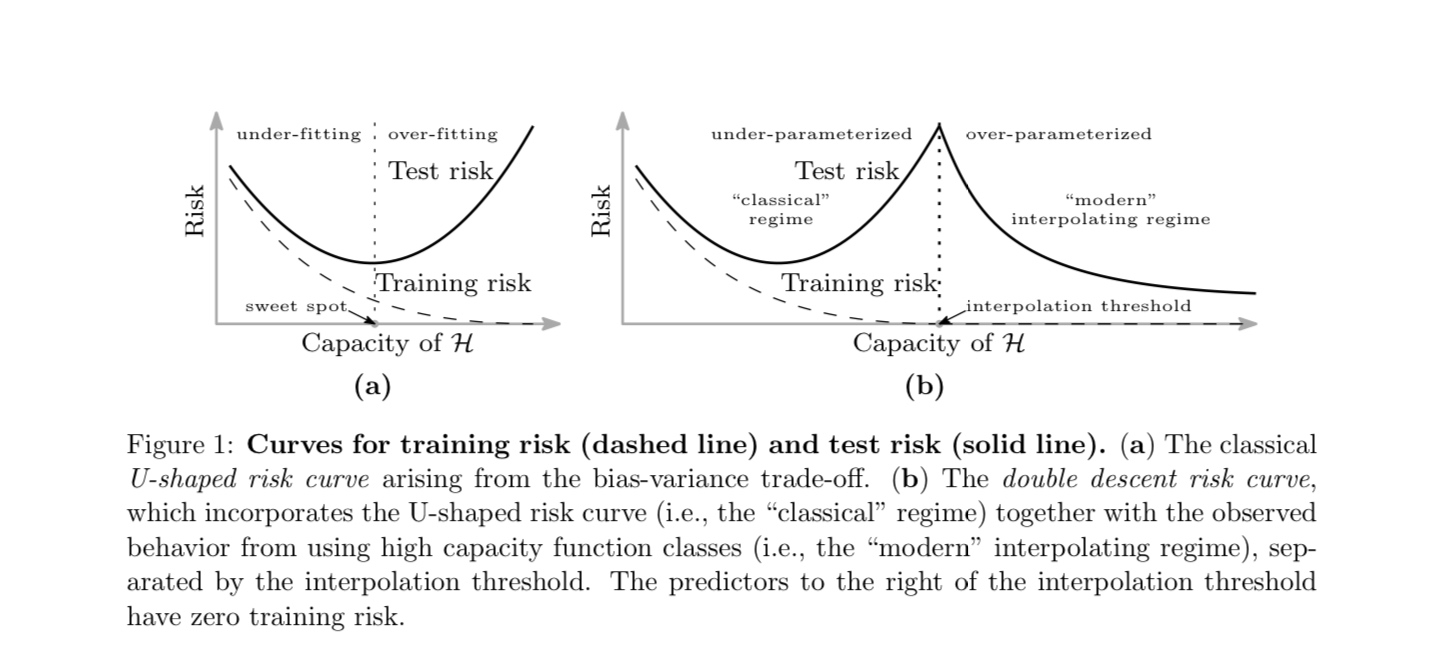

Recap: The bias-variance trade-off. When low complexity models cannot inherently capture the complexity of the data, they have a bias or they underfits the training data. It can arise from a poor architecture or features engineered from the data. On the other end, complex models with large amount of parameters may fit the training data really well, but they tend to perform poorly on the test loss. This error, called variance error, occurs due to overfitting. in these cases, models are said to not generalize well across different data distributions. This trade-off between bias and variance is still present at the core of modern models.

To counter these problems, early approaches involved regularizing models to prefer solutions with “simple” patterns - ones that have low-norm weights even in over-parameterized models. There are modern theoretical frameworks such as the Neural Tangent Kernels (NTK) that show that over-parameterized networks behave like kernel machines and converge to smooth solutions due to regularization. That is, interpolating regularized solutions seems to generalize well.

I also mentioned poor model architecture as a source of these errors. Over-parameterized models can often be replaced with models designed with a good inductive bias - models that leverage the structures in the data to generate structured solutions. For example, CNNs leveraged that images have spatial-features, and replaced fully-connected layers with convolutional kernels that greatly reduced the number of parameters.

Model architectures designed with inductive bias are the best kind of models. Attention is also a product of such design philosophies.

So far, we have

- Maybe over-parameterization works but we need to have appropriate regularization tricks such as dropout and weight decay

- Adaptive optimizers, early stopping, large batch training all seem to make sense

In 2018, Belkin et al., showed that test loss follows a “double descent” curve - it peaks at a critical model complexity, then decreases. This phenomenon has been seen to occur in CNNs, Transformers, and linear models with high dimensional features. The take away message is that more complexity does not mean worse generalization in the modern architectures.

How does data fit in all this? More the amount of data, the simpler the model becomes - we have seen that the inner layers of LLMs require sparse changes in the weights to fine-tune to different datasets (LoRA). It maybe seen as if large datasets prevent overfitting since larger models are able to absorb the noise in the data without harming the underlying signal.

In 2019, Bartlett et al., showed that models can memorize noisy data but still generalize if noise is structured or data has low intrinsic dimensions. High-dimensional mode old can separate signal from noise via implicit regularization. At the core of some of the large models we have built, we made a rather huge assumption - the noise in the data is Gaussian. The MSE loss is nothing but a negative likelihood over Gaussian noise. These assumptions must be carefully considered while building models for different applications.

So what do we make of all this? It’s new information that we didn’t have before while designing models. Maybe it’s because of this the model scaling laws are working.

Benign overfitting in linear regression

The ultimate goal of machine learning is how to train a model that fits to a given data. We are approaching this by reducing the empirical training risk/error through a loss function. There is a mis-match between what are doing and the goal we are trying to achieve - reducing the test loss or generalize well to new data. Let us understand this better.

A model’s ability to fit a wide variety of functions or patterns in the data is a known as its capacity. As we have increased the models’ capacity, we seemed to have the cross the peak in the double descent curve - they are over-fitting but it seems to be benign. That is, they seem to have zero training risk and the test risk approaches the best possible value. Why do we think this is benign? As the model capacity increases, the test loss seems to be decreasing even more. So how do we reach this benign overfitting region?

The authors tested this with linear regression and significant over-parameterization. For a linear regression model the minimum norm solution is given by

\[\hat \theta = X^T = (XX^T)^{-1}y \; X\theta = y\]The authors define the excess risk of the estimator as

\[T(\theta) := \mathbb E_{x, t} [(y - x^T \theta)^2 - (y - X^T \theta^*)^2]\]How do you over-parameterize a linear regression model? The authors consider the number of eigenvectors of the covariance of the data. They consider quantities from the PCA theory. They concluded that if the decay of the eigenvalues of the covariance is sharp, then we can reach the benign overfitting region.

Enjoy Reading This Article?

Here are some more articles you might like to read next: