DiBS Notes

Lecture 1

03/01/2022

~Chapter 1: Introduction

- Embedded databases - Databases which don’t have high amount of concurrent access, but is mostly used by a single user. These databases are implemented by SQLite in general.

-

Motivation for Database systems -

- Atomicity

- Concurrency

- Security

- …

Data Models

A collection tools for describing the data, relationships in the data, semantics and other constraints.

We start using the relational models that are implemented using SQL. Then, we shall study Entity-Relationship data model. These are used for database design. We will briefly touch upon Object-based data models. Finally, we shall see Semi-structured data model as a part of XML.

There are also other models like Network model and Hierarchical model (old) etc.

Relational Model

All the data is stored in various tables. A relation is nothing but a table. Back in the 70’s, Ted Codd (Turing Award 1981) formalized the Relational Model. According to his terminology, a table is same as a relation, a column is also called an attribute, and the rows of the table are called as tuples. The second major contribution he made was introducing the notion of operations. Finally, he also established a low-level database engine that could execute these operations.

Some more terminology - Logical Schema is the overall logical structure of the database. It is analogous to the type information of a variable in a program. A physical schema is the overall physical structure of the database. An instance is the actual content of the database at a particular point in time. It is analogous to the value of a variable. The notion of physical data independence is the ability to modify the physical schema without changing the logical schema.

Data Definition Language (DDL)

It is the specification notation for defining the database schema. DDL compiler generates a set of table templates stored in a data dictionary. A data dictionary contains metadata (data about data) such as database schema, integrity constraints (primary key) and authorization.

Data Manipulation Language (DML)

It is the language for accessing and updating the data organized by the appropriate data model. It is also known as a query language. There are basically two types of data-manipulation languages - Procedural DML requires a user to specify what data is needed and how to get that data; Declarative DML requires a user to specify what data is need without specifying how to get those data. Declarative/non-procedural DMLs are usually easier to learn.

SQL Query Language

SQL query language is non-procedural! It is declarative. SQL is not a Turing machine equivalent language. There are extensions which make it so. SQL does not support actions such as input from the users, typesetting, communication over the network and output to the display. A query takes as input several tables and always returns a single table. SQL is often used embedded within a higher-level language.

Database Design involves coming up with a Logical design and Physical design.

A Database Engine accepts these queries and parses them. It is partitioned into modules that deal with each of the responsibilities of the overall system. The functional components of a database system can be divided into

-

Storage manager - Actually stores the data. It takes the logical view and maps it to the physical view. It is also responsible to interact with the OS file manager for efficient storing, retrieving and updating of data. It has various components such as authorization and integrity manager, transaction manager, file manager and buffer manager. It implements several data structures as a part of the physical system implementation - data files, data dictionary and indices.

-

Query processor - It includes DDL interpreter, DML compiler (query to low-level instructions along with query optimization) and the query evaluation engine.

Query processing involves parsing and translation, optimization, and evaluation. Statistics of the data are also used in optimization.

-

Transaction management - A transaction is a collection of operations that performs a single logical function in a database application. The transaction management component ensures that the database remains in a consistent state despite system failure. The concurrency control manager controls the interaction among the concurrent transactions to ensure the consistency of the data.

Lecture 2

04-01-22

Database Architecture

There are various architectures such as centralized databases, client-server, parallel databases and distributed databases.

~Chapter 2: Intro to Relational Model

Attributes

The set of allowed values for each attribute is called the domain of the attribute. Attribute values are required to be atomic - indivisible. Todd realized that having sets or divisible attributes complicates the algebra. The special value null is a member of every domain that indicates the value is unknown. The null values causes complications in the definition of many operations.

Relations are unordered. The order of tuples is irrelevant for the operations logically.

Database Schema - The logical structure of the database.

Keys

K is a superkey of the relation R if values for K are sufficient to identify a unique tuple of each possible relation \(r(R)\). Superkey \(K\) is a candidate key if \(K\) is minimal. One of the candidate keys is selected to be the primary key. A foreign key constraint ensures that the value in one relation must appear in another. There is a notion of referencing relation and a referenced relation.

Relational Query Languages

Pure languages include Relational algebra, Tuple relational calculus and Domain relational calculus. These three languages are equivalent in computing power.

Lecture 3

06/01/2022

Relational Algebra

An algebraic language consisting of a set of operations that take one or two relations as input and produces a new relation as their result. It consists of six basic operators -

- select \(\sigma\)

- project \(\Pi\)

- union \(\cup\)

- set difference \(-\)

- Cartesian product \(\times\)

- rename \(\rho\)

We shall discuss each of them in detail now.

Select Operation

The select operation selects tuples that satisfy a given predicate. So, it’s more like where rather than select in SQL. The notation is given by \(\sigma_p(r)\).

Project Operation

A unary operation that returns its argument relation, with certain attributes left out. That is, it gives a subset of attributes of tuples. By definition, it should only return the attributes. However, in most cases we can return modified attributes. The notation is given by \(\Pi_{attr_1, attr_2, ...}(r)\)

Composition of Relation Operations

The result of a relational-algebra is a relation and therefore we different relational-algebra operations can be grouped together to form relational-algebra expressions.

Cartesian-product Operation

It simply takes the cartesian product of the two tables. Then, we can use the select condition to select the relevant (rational) tuples.

The join operation allows us to combine a select operation and a Cartesian-Product operation into a single operation. The join operation \(r \bowtie_\theta s = \sigma_\theta (r \times s)\). Here \(\theta\) represents the predicate over which join is performed.

Union operation

This operation allows us to combine two relations. The notation is \(r \cup s\). For this operation to be vald, we need the following two conditions -

- \(r, s\) must have the same arity (the same number of attributes in a tuple).

- The attribute domains must be compatible.

Why second?

Set-Intersection Operation

This operator allows us to find tuples that are in both the input relations. The notations is \(r \cap s\).

Set Difference Operation

It allows us to find the tuples that are in one relation but not in the other.

The assignment Operation

The assignment operation is denoted by \(\leftarrow\) and works like the assignment in programming languages. It is used to define temporary relation variables for convenience. With the assignment operation, a query can be written as a sequential program consisting of a series of assignments followed by an expression whose value is displayed as the result of the query.

The rename Operation

The expression \(\rho_x(E)\) is used to rename the expression \(E\) under the name \(x\). Another form of the rename operator is given by \(\rho_{x(A1, A2, ...)}(E)\).

Difference between rename and assignment? Is assignment used to edit tuples in a relation?

Are these set of relational operators enough for Turing completeness? No! Check this link for more info.

Aggregate Functions

We need functions such as avg, min, max, sum and count to operate on the multiset of values of a column of a relation to return a value. Functions such as avg and sum cannot be written using FOL or the relations we defined above. Functions such as min and max can be written using a series of queries but it is impractical. The other way of implementing this is to use the following

However, this definitive expression is very inefficient as it turns a linear operation to a quadratic operation.

Note. The aggregates do not filter out the duplicates! For instance, consider $\gamma_{count(course_id)}(\sigma_{year = 2018}(section))$. What if a course has two sections? It is counted twice.

Group By Operation

This operation is used to group tuples based on a certain attribute value.

Equivalent Queries

There are more ways to write a query in relation algebra. Queries which are equivalent need not be identical.

In case of SQL, the database optimizer takes care of optimizing equivalent queries.

~Chapter 3: Basic SQL

Domain Types in SQL

-

char(n)- Fixed length character string, with user-specified length \(n\). We might need to use the extra spaces in the end in the queries too! -

varchar(n)- Variable length strings - …

Create Table Construct

An SQL relation is defined using the create table command -

create table r

(A_1, D_1, A_2, D_2, ..., A_n, D_n)

Integrity Constraints in Create Table

Types of integrity constraints

- primary key \((A_1, A_2, A_3, ...)\)

- Foreign key \((A_m, ..., A_n)\) references r

- not

null

SQL prevents any update to the database that violates an integrity constraint.

Lecture 4

10-01-22

Basic Query Structure

- A typical SQL query has the form:

select A1, A2, ..., An

from r1, r2, ..., rm

where P

where, \(A_i\) are attributes, \(r_i\) are relations, and \(P\) has conditions/predicates. The result of an SQL query is a relation. SQL is case-insensitive in general.

- We shall be using PostgreSQL for the rest of the course.

- SQL names are usually case insensitive. Some databases are case insensitive even in string comparison!

select clause

- To force the elimination of duplicates, insert the keyword

distinctafter select. Duplicates come from- Input itself is a multiset

- Joining tables

Removing duplicates imposes an additional overhead to the database engine. Therefore, it was ubiquitously decides to exclude duplicates removal in SQL.

-

The keyword

allspecifies that duplicates should not be removed. - SQL allows renaming relations and attributes using the

asclause. We can skipasin some databases like Oracle. Also, some databases allow queries with nofromclause.

from clause

If we write select * from A, B, then the Cartesian product of A and B is considered. This usage has some corner cases but are rare.

### as clause

It can be used to rename attributes as well as relations.

Self Join

How do we implement various levels of recursion without loops and only imperative statements? Usually, union is sufficient for our purposes. However, this is infeasible in case of large tables or higher levels of hierarchy.

String operations

SQL also includes string operations. The operator like uses patterns that are describes using two special character - percent % - Matches any substring and underscore _ matches any character (Use \ as the escape character). Some databases even fully support regular expressions. Most databases also support ilike which is case-insensitive.

Set operations

These include union, intersect and except (set difference). To retain the duplicates we use all keyword after the operators.

null values

It signifies an unknown value or that a value does not exist. The result of any arithmetic expression involving null is null. The predicate is null can be used to check for null values.

Lecture 5

11-01-22

Aggregate Functions

The having clause can be used to select groups which satisfies certain conditions. Predicates in the having clause are applied after the formation of groups whereas predicates in the where clause are applied before forming the groups.

Nested subqueries

SQL provides a mechanism for the nesting of subqueries. A subquery is a select-from-where expression that is nested within another query. The nesting can be done in the following ways -

-

fromclause - The relation can be replaced by any valid subquery -

whereclause - The predicate can be replaced with an expression of the formB <operation> (subquery)whereBis an attribute andoperationwill be defined later. - Scalar subqueries - The attributes in the

selectclause can be replaced by a subquery that generates a single value!

subqueries in the from clause

the with clause provides a way of defining a temporary relation whose definition is available only to the query in which the with clause occurs. For example, consider the following

with max_budget(value) as

(select max(budget))

from department)

select department.name from department, max_budget

where department.budget = max_budget.value

We can write more complicated queries. For example, if we want all departments where the total salary is greater than the average of the total salary at all departments.

with dept_total(dept_name, value) as

(select dept_name, sum(salary) from instructor group by dept_name)

dept_total_avg(value) as

(select avg(value) from dept_total)

select dept_name

from dept_total, dept_total_avg

where dept_total.value > dept_total_avg.value

subqueries in the where clause

We use operations such as in and not in. We can also check the set membership of a subset of attributes in the same order. There is also a some keyword that returns a True if at least one tuple exists in the subquery that satisfies the condition. Similarly we have the all keyword. There is also the exists clause which returns True if the tuple exists in the subquery relation. For example, if we want to find all courses taught in both the Fall 2017 semester and in the spring 2018 semester. We can use the following

select course_id from section as S

where semester = 'Fall' and year = 2017 and

exists (select * from section as T

where semester = 'Spring' and year = 2018

and S.course_id = T.course_id)

Here, S is the correlation name and the inner query is the correlated subquery. Correspondingly, there also is a not exists clause.

The unique construct tests whether a subquery has any duplicate tuples in its result. It evaluates to True if there are no duplicates.

Scalar Subquery

Suppose we have to list all the departments along with the number of instructors in each department. Then, we can do the following

select dept_name,

(select count(*) from instructor

where department.dept_name = instructir.dept_name as num_instructors

) from department;

There would be a runtime error if the subquery returns more than one result tuple.

Modification of the database

We can

-

delete tuples from a given relation using

delete from. It deletes all tuples without awhereclause. We need to be careful while using delete. For example, if we want to delete all instructors whose salary is less than the average salary of instructors. We can implement this using a subquery in thewhereclause. The problem here is that the average salary changes as we delete tuples from instructor. The solution for this problem is - we can compute average first and then delete without recomputation. This modification is usually implemented. -

insert new tuples into a give relation using

insert into <table> values <A1, A2, ..., An>. Theselect from wherestatement is evaluated fully before any of its results are inserted into the relation. This is done to prevent the problem mentioned indelete. -

update values in some tuples in a given relation using

update <table> set A1 = ... where .... We can also use acasestatement to make non-problematic sequential updates. For example,update instructor set salary = case when salary <= 1000 then salary *1.05 else salary*1.03 end

coalesce takes a series of arguments and returns the first non-null value.

Lecture 6

13-01-22

~Chapter 4: Intermediate SQL

Join operations take two relations and return as a result another relation. There are three types of joins which are described below.

Natural Join

Natural join matches tuples with the same values for all common attributes, and retains only one copy of each common column.

Can’t do self-join using this?

However, one must be beware of natural join because it produces unexpected results. For example, consider the following queries

-- Correct version

select name, title from student natural join takes, course

where takes.course_id = course.course_id

-- Incorrect version

select name, title

from student natural join takes natural join course

The second query omits all pairs where the student takes a course in a department other than the student’s own department due to the attribute department name. Sometimes, we don’t realize some attributes are being equated because all the common attributes are equated.

Outer join

One can lose information with inner join and natural join. Outer join is an extension of the join operation that avoids loss of information. It computes the join and then adds tuples from one relation that do not match tuples in the other relation to the result of the join. Outer join uses null to fill the incomplete tuples. We have variations of outer join such as left-outer join, right-outer join, and full outer join. Can outer join be expressed using relational algebra? Yes, think about it. In general, \((r ⟖ s) ⟖ t \neq r ⟖ (s ⟖t)\).

Note. \((r ⟖ s) ⟕ t \neq r ⟖ (s⟕t)\). Why? Consider the following

r | X | Y | s | Y | Z | t | Z | X | P |

| 1 | 2 | | 2 | 3 | | 3 | 4 | 7 |

| 1 | 3 | | 3 | 4 | | 4 | 1 | 8 |

-- LHS -- RHS

| X | Y | Z | P | | X | Y | Z | P |

| 1 | 2 | 3 | - | | 4 | 2 | 3 | 7 |

| 1 | 3 | 4 | 8 | | 1 | 3 | 4 | 8 |

Views

In some cases, it is not desirable for all users to see the entire logical model. For example, if a person wants to know the name and department of instructors without the salary, then they can use

create view v as select name, dept from instructor

A view provides a mechanism to hide certain data from the view of certain users. The view definition is not the same as creating a new relation by evaluating the query expression. Rather, a view definition causes the saving of an expression; the expression is substituted into queries using the view.

One view may be used in the expression defining another view. A view relation \(v_1\) is said to depend directly on a view relation \(v_2\) if \(v_2\) is used in the expression defining \(v_1\). It is said to depend on \(v_2\) if there is a path of dependency. A recursive view depends on itself.

Materialized views

Certain database systems allow view relations to be physically stored. If relations used in the query are updated, the materialized view result becomes out of date. We need to maintain the view, by updating the view whenever the underlying relations are updated. Most SQL implementations allow updates only on simple views.

Transactions

A transaction consists of a sequence of query and/or update statements and is atomic. The transaction must end with one of the following statements -

- Commit work - Updates become permanent

- Rollback work - Updates are undone

Integrity Constraints

not nullprimary key (A1, A2, ..., Am)unique (A1, A2, ..., Am)check (P)

check clause

The check(P) clause specifies a predicate P that must be satisfied by every tuple in a relation.

Cascading actions

When a referential-integrity constraint is violated, the normal procedure is to reject the action that caused the violation. We can use on delete cascade or on update cascade. Other than cascade, we can use set null or set default.

Lecture 7

17-01-22

Continuing the previous referential-integrity constraints. Suppose we have the command

create table person(

ID char(10),

name char(10),

mother char(10),

father char(10),

primary key ID,

foreign key father references person,

foreign key mother references person

)

How do we insert tuples here without violating the foreign key constraints? We can initially insert the name attributes and then the father and mother attributes. This can be done by inserting null in the mother/father attributes. Is there any other way of doing this? We can insert tuples by using the acyclicity among the tuples using topological sorting. There is also a third way which is supported by SQL. In this method, we can ask the database to defer the foreign key checking till the end of the transaction.

Complex check conditions

The predicate in the check clause can be an arbitrary predicate that can include a subquery (?) For example,

check (time_slot_id in (select time_slot_id from time_slot))

This condition is similar to a foreign key constraint. We have to check this condition not only when the ‘section’ relation is updated but also when the ‘time_slot’ relation is updated. Therefore, it is not currently supported by any database!

Built-in Data Types in SQL

In addition to the previously mentioned datatypes, SQL supports dates, intervals, timestamps, and time. Whenever we subtract date from a date or time from time, we get an interval.

Can we store files in our database? Yes! We can store large objects as

-

blob- Binary large object - Large collection of uninterpreted binary data (whose interpretation is left to the application outside of the database system). -

clobcharacter large object - Large collection of

Every database has its own limit for the maximum file size.

Index Creation

An index on an attribute of a relation is a data structure that allows the database system to find those tuples in the relation that have a specified value for that attribute efficiently, without scanning through all the tuples of the relation. We create an index with the create index command

create index <name> on <relation-name> (attribute):

Every database automatically creates an index on the primary key.

Authorization

We can assign several forms of authorization to a database

- Read - allows reading, but no modification of data

- Insert - allows insertion of new data, but not modification of existing data

- Update - allows modification, but not deletion of data

- Delete - allows deletion of data

We have more forms on the schema level

- Index - allows creation and deletion of indices

- Resources, Alteration

- Drop - allows deleting relations

Each of these types of authorizations is called a privilege. These privileges are assigned on specified parts of a database, such as a relation, view or the whole schema.

The grant statement is used to confer authorization.

grant <privilege list> on <relation or view> to <user list>

-- Revoke statement to revoke authorization

revoke select on student from U1, U2, U3

-

<user list>can be a user-id, public or a role. Granting a privilege on a view does no imply granting any privileges on the underlying relations. -

<privilege-list>may be all to revoke all privileges the revokee may hold. - If

<revoke-list>includes public, all users lost the privilege except those granted it explicitly. - If the same privilege was granted twice to the same user by different grantees, the user may retain the privilege after the revocation. All privileges that depend on the privilege being revoked are also revoked.

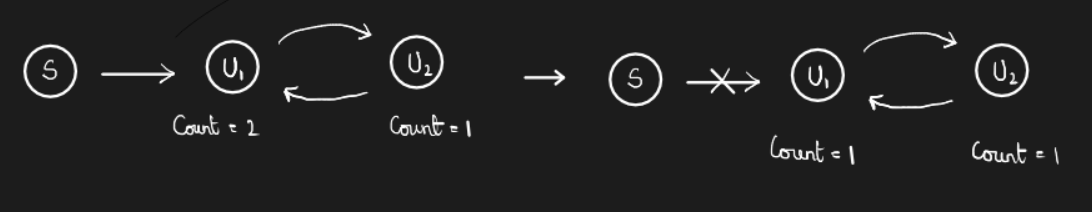

One of the major selling points of Java was a garbage collector that got rid of delete/free and automatically freed up unreferenced memory. This action is done via a counter which keeps a count of the variables that are referencing a memory cell. SQL uses a similar counter for keeping track of permissions of various objects. However, this approach fails in case of cycles in the dependency graph. For instance, consider the following situation

This problem does not occur in case of programming languages. The solution to this problem is TODO.

What about garbage collection when the program is huge? Is it efficient? Currently, many optimizations, like incremental garbage collection, have been implemented to prevent freezing of a program for garbage collection. Even after this, Java is not preferred for real-time applications. However, programmers prefer Java because of the ease of debugging and writing programs.

Roles

A role is a way to distinguish among various users as far as what these users can access/update in the database.

create a role <name>

grant <role> to <users>

There are a couple more features in authorization which can be looked up in the textbook.

We can also give authorization on views.

Other authorization features

-

references privilege to create foreign key

grant reference (dept_name) on department to Mariano -

transfer of privileges

grant select on department to Amit with grant option; revoke select on department from Amit, Satoshi cascade; revoke select on deparment from Amit, Satoshi restrict;

Lecture 8

18-01-22

~ Chapter 5: Advanced SQL

Programming languages with automatic garbage collection cannot clean the data in databases. That is, if you try using large databases, then your system may hang.

JDBC code

DriverManager.getConnection("jdbc:oracle:thin:@db_name") is used to connect to the database. We need to close the connection after the work since each connection is a process on the server, and the server can have limited number of processes. In Java we check the null value using wasNull() function (not intuitive).

Prepared statements are used to take inputs from the user without SQL injection. We can also extract metadata using JDBC.

SQL injection

The method where hackers insert SQL commands into the database using SQL queries. This problem is prevented by using prepared statements.

This lecture was cut-short, and hence has less notes.

Lecture 9

20-01-22

Functions and Procedures

Functions and procedures allow ‘business logic’ to be stored in the database and executed from SQL statements.

We can define a function using the following syntax

create function <name> (params)

returns <datatype>

begin

...

end

You can return scalars or relations. We can also define external language routines in other programming languages. These procedures can be more efficient than the ones defined in SQL. We can declare external language procedures and functions using the following.

create procedure <name>(in params, out params (?))

language <programming-language>

external name <file_path>

However, there are security issues with such routines. To deal with security problems, we can

- sandbox techniques - using a safe language like Java which cannot access/damage other parts of the database code.

- run external language functions/procedures in a separate process, with no access to the database process’ memory.

Triggers

When certain actions happen, we would like the database to react and do something as a response. A trigger is a statement that is executed automatically by the system as a side effect of a modification to the database. To design a trigger mechanism, we must specify the conditions under which the trigger is to be executed and the actions to be taken when the trigger executes. The syntax varies from database to database and the user must be wary of it.

The SQL:1999 syntax is

create trigger <name> after [update, insert, delete] of <relation> on <attributes>

referencing new row as nrow

referencing old row as orow

[for each row]

...

If we do not want the trigger to be executed for every row update, then we can use statement level triggers. This ensures that the actions is executed for all rows affected by a transaction. We use for each instead of for each row and we reference tables instead of rows.

Triggers need not be used to update materialized views, logging, and many other typical use cases. Use of triggers is not encouraged as they have a risk of unintended execution.

Recursion in SQL

SQL:1999 permits recursive view definition. Why do we need recursion? For example, if we want to find which courses are a prerequisite (direct/indirect) for a specific course, we can use

with recursive rec_prereq(course_id, prereq_id) as (

select course_id, prereq_id from prereq

union

select rec_prereq.course_id, prereq.prereq_id,

from rec_prereq, prereq

where rec_prereq.prereq_id = prereq.course_id

) select * from rec_prereq;

This example view, rec_prereq is called the transitive closure of the prereq relation. Recursive views make it possible to write queries, such as transitive closure queries, that cannot be written without recursion or iteration. The alternative to recursion is to write a procedure to iterate as many times as required.

The final result of recursion is called the fixed point of the recursive view. Recursive views are required to be monotonic. This is usually achieved using union without except and not in.

Advanced Aggregation Features

Ranking

Ranking is done in conjunction with an order by specification. Can we implement ranking with the knowledge we have currently? Yes, we can use count() to check how many tuples are ahead of the current tuple.

select *, (select count(*) from r as r2 where r2.t > r1.t) + 1 from r as r1

However, this is \(\mathcal O(n^2)\). Also, note that the above query implements sparse rank. Dense rank can be implemented using the unique keyword. Rank in SQL can be implemented using rank() over ([order by A desc]).

Ranking can be done within partitions within the dataset. This is done using partition by. The whole query is given by

select ID, dept_name, rank() over

(partition by dept_name order by GPA desc)

from dept_grades

order by dept_name, dept_rank

Multiple rank clauses can occur in a single select clause! Ranking is done after applying group by clause/aggregation. Finally, if we want only the top few ranks, we can use limit. However, this method is restrictive as we can’t select top-n in each partition and it is inherently non-deterministic. This is because ties are broken arbitrarily. It is usually better to select directly using the rank attribute by embedding the relation in an outer query.

Ranking has other function such as

-

percent_rankgives percentile -

cume_distgives fraction -

row_number(non-deterministic)

SQL:1999 permits the user to specify nulls first or nulls last.

For a given constant \(n\), the function ntile(n) takes the tuples in each partition in the specified order, and divides them into \(n\) buckets with equal number of tuples.

Windowing

Here are the examples of window specifications

between rows unbounded preceding and current

rows nbounded preceding

range between 10 preceding and current row

range interval 10 day preceding

-- Given a relation transaction

-- where value is positive for a deposite and

-- negative for a withdrawal, find total balance

-- of each account after each transaction on it

select account_number, date_time,

sum(value) over

(partition by account_number

order by date_time

rows unbounded preceding)

as balance

from transaction

order by account_number, date_time

We can perform windowing within partitions.

Lecture 10

24-01-22

We will cover one last concept in SQL and then move on to ER models.

OLAP

OLAP stands for Online Analytical Processing. It allows interactive analysis of data, allowing data to be summarized and viewed in different ways in an online fashion. Data that can be modeled as dimension attributes and measure attributes are called multidimensional data. Measure attributes measure some value that can be aggregated upon. Dimension attributes define the dimension on which measure attributes are viewed.

Items are often represented using cross-tabulation (cross-tab), also referred to as a pivot table. The dimension attributes form the row and column headers. The measure attributes are mentioned in each individual cell. Similarly, we can create a data cube which is a multidimensional generalization of a cross-tab. We can represent cross-tabs using relations. These can be used in SQL with null representing the total aggregates (despite the confusion).

The cube operation in SQL computes the union of group by’s on every subset of the specified attributes. The function grouping() can be applied on an attribute to check if the null value represents ‘all’ or not. It returns 1 if the value is a null value representing all. The rollup construct generates union on every prefix of a specified list of attributes. It can be used to generate aggregates at multiple levels of a hierarchy.

OLAP Operations

- Pivoting - Changing the dimensions used in a cross-tab

- Slicing - Creating a cross-tab for fixed values only. Sometimes called dicing when values for multiple dimensions are fixed.

- Rollup - Moving from finer-granularity data to a coarser granularity.

- Drill down - Opposite of rollup.

Early OLAP systems precomputed all possible aggregates in order to provide online response. Since this is infeasible, it suffices to precompute some aggregates and compute others on demand from pre-computed aggregates.

~Chapter 6: Database Design using the ER Model

How do we design schemas for a database? Is there any systematic way? We shall study this in the following chapter. The entity-relationship model proves useful in modelling the data.

When we design a database, we initially characterize the data needs of the database users. Then, we choose a data model to translate the requirements into a conceptual schema. The conceptual schema is designed using the ER model, and the implementation can be done in different ways such as the relation model. We do this in the final step where we move from an abstract data model to the implementation in the database.

Why do we care about good design? A bad design can have redundancy - repeats information which might cause data inconsistency and incompleteness which might make certain aspects of the enterprise difficult or impossible to model.

ER Model

Entity is a thing or an object in the enterprise that is distinguishable from other objects. It is described by a set of attributes. A relationship is an association among several entities. These models are represented graphically using the ER diagram.

Entity sets

An entity set is a set of entities of the same type that share the same properties. For example, it can be a set of all persons (each of which is an entity). A subset of the attributes form a primary key of the entity set.

Entity sets can be represented graphically using rectangles and attributes listed inside it. The primary keys are underlined.

Relationship sets

A relationship set is a mathematical relation among \(n \geq 2\) entities, each taken from entity sets.

\[\{(e_1, e_2, \dots, e_n \vert e_1 \in E_1, e_2 \in E_2, \dots, e_n \in E_n\}\]where \((e_1, e_2, \dots, e_n)\) is a relationship. We draw a line between the related entities to represent relationships. Relationship sets are represented using diamonds.

An attribute can also be associated with a relationship set. This is shown using a rectangle with the attribute name inside connected to the relationship set diamond with a dotted line.

Roles

The entity sets of a relationship need not be distinct. In such a case, we assign ‘roles’ to the entity sets.

Degree of a relationship set

The degree of a relationship set is defined as the number of entities associated with the relationship set. Most relationship sets are binary. We can’t represent all ternary relations as a set of binary relations.

There are no null values in the ER model. This becomes an issue in case of ternary relationships. Such problems are prevented in binary relationships. For example, think about the ER model of people and their parents. If someone has only one parent, then it is difficult to represent this using a ternary relationship between people, fathers and mothers. Instead, we could have two separate father and mother relationships. Binary relationships also provide the flexibility of mapping multiple entities to the same entity between two entity sets. While this is also possible in ternary relationships, we have more options in case of binary relationships.

Does ternary relationship convey more information than binary relationship in any case? Yes, that is why we can’t represent all ternary relations as a set of binary relations. For instance, think about the instructor, project and student mapping. There are many combinations possible here which can’t be covered using binary relationships.

Complex attributes

So far, we have considered atomic attributes in the relation model. The ER model does not impose any such requirement. We have simple and composite attributes. A composite attribute can be broken down into more attributes. For instance, we can have first and last name in name. We can have single-valued and multi-valued attributes. We can also have derived attributes. Multivalued attributes are represented inside curly braces {}.

Mapping cardinality constraints

A mapping cardinality can be one-to-one, one-to-many, many-to-one or many-to-many.

Lecture 11

25-01-22

We express cardinality constraints by drawing either a directed line \((\to)\), signifying ‘one’, or an undirected line \((-)\), signifying ‘many’, between the relationship set and the entity set.

Let us now see the notion of participation.

Total participation - every entity in the entity set participates in at least one relationship in the relationship set. This is indicated using a double line in the ER diagram.

Partial participation - some entities may not participate in any relationship in the relationship set.

We can represent more complex constraints using the following notation. A line may have an associated minimum and maximum cardinality, shown in the form ‘l..h’. A minimum value of 1 indicates total participation. A maximum value of 1 indicates that the entity participates in at most one relationship. A maximum value of * indicates no limit.

How do we represent cardinality constraints in Ternary relationships? We allow at most one arrow out of a ternary (or greater degree) relationship to indicate a cardinality constraint. For instance, consider a ternary relationship R between A, B and C with arrows to B, then it indicates that each entity in A is associated with at most one entity in B for an entity in C.

Now, if there is more than one arrow, the understanding is ambiguous. For example, consider the same setup from the previous example. If there are arrows to B and C, it could mean

- Each entity in A is associated with a unique entity from B and C, or

- Each pair of entities from (A, B) is associated with a unique C entity, and each pair (A, C) is associated with a unique entity in B.

Due to such ambiguities, more than one arrows are typically not used.

Primary key for Entity Sets

By definition, individual entities are distinct. From the database perspective, the differences are expressed in terms of their attributes. A key for an entity is a set of attributes that suffice to distinguish entities from each other.

Primary key for Relationship Sets

To distinguish among the various relationships of a relationship set we use the individual primary keys of the entities in the relationship set. That is, for a relationship set \(R\) involving entity sets \(E_1, E_2, \dots, E_n\), the primary key is given by the union of the primary keys of \(E_1, E_2, \dots, E_n\). If \(R\) is associated with any attributes, then the primary key includes those too. The choice of the primary key for a relationship set depends on the mapping cardinality of the relationship set.

Note. In one-to-many relationship sets, the primary key of the many side acts as the primary key of the relationship set.

Weak Entity Sets

Weak entity set is an entity set whose existence depends on some other entity set. For instance, consider the section and course entity set. We cannot have a section without a course - an existence dependency. What if we use a relationship set to represent this? This is sort of redundant as both section and course have the course ID as an attribute. Instead of doing this, we can say that section is a weak entity set identified by course.

In ER diagrams, a weak entity set is depicted via a double rectangle. We underline the discriminator of a weak entity set with a dashed line, and the relationship set connecting the weak entity set (using a double line) to the identifying strong entity set is depicted by a double diamond. The primary key of the strong entity set along with the discriminators of the weak entity set act as a primary key for the weak entity set.

Every weak entity set must be associated with an identifying entity set. The relationships associating the weak entity set with the identifying entity set is called the identifying relationship. Note that the relational schema we eventually create from the weak entity set will have the primary key of the identifying entity set.

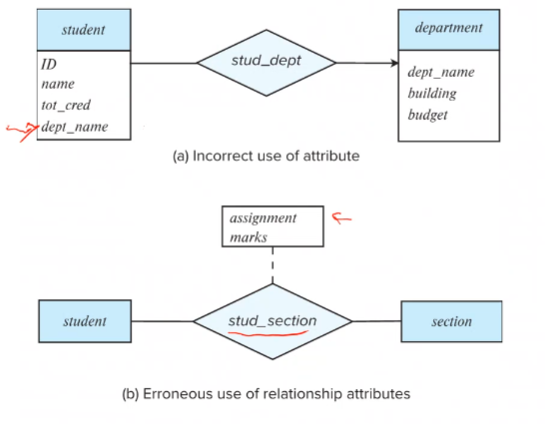

Redundant Attributes

Sometimes we often include redundant attributes while associating two entity sets. For example, the attribute course_id was redundant in the entity set section. However, when converting back to tables, some attributes get reintroduced.

Reduction to Relation Schemas

Entity sets and relationship sets can be expressed uniformly as relation schemas that represent the content of the database. For each entity set and relationship set there is a unique schema that is assigned the name of the corresponding entity set or relationship set. Each schema has a number of columns which have unique names.

- A strong entity set reduces to a schema with the same attributes.

- A weak entity set becomes a table that includes a column for the primary key of the identifying strong entity set.

- Composite attributes are flattened out by creating separate attribute for each component attribute.

- A multivalued attribute \(M\) of an entity \(E\) is represented by a separate schema \(EM\). Schema \(EM\) has attributes corresponding to the primary key of \(E\) and an attribute corresponding to multivalued attribute \(M\).

- A many-to-many relationship set is represented as a schema with attributes for the primary keys of the two participating entity sets, and any descriptive attributes of the relationships set.

- Many-to-one and one-to-many relationship sets that are total on the many-side can be represented by adding an extra attribute to the ‘many’ side. If it were not total, null values would creep up. It is better to model such relationships as many-to-many relationships so that the model needn’t be changed when the cardinality of the relationship is changed in the future.

Extended ER Features

Specialization - Overlapping and Disjoint; Total and partial.

How do we represent this in the schema? Form a schema for the higher-level entity and for the lower-level entity set. Include the primary key of the higher level entity set and local attributes in that of the local one. However, the drawback of such a construction is that we need to access two relations (higher and then lower) to get information.

Generalization - Combine a number of entity sets that share the same features into a higher-level entity set.

Completeness constraint specifies whether or not an entity in the higher-level entity set must belong to at least on of the lower-level entity sets within a generalization (total and partial concept). Partial generalization is the default.

Aggregation can also be represented in the ER diagrams.

- To represent aggregation, create a schema containing - primary key of the aggregated relationship, primary key of the associated entity set, and any descriptive attributes.

I don’t understand aggregation

Design issues

Lecture 12

27-01-22

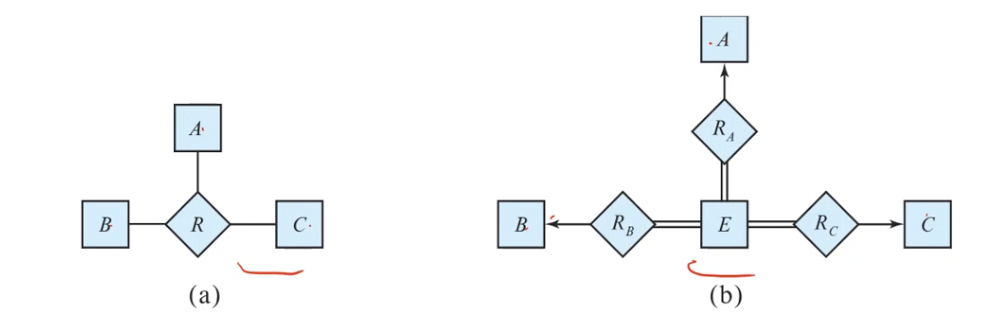

Binary vs. Non-Binary Relationships

We had discussed this previously in this section. In general, any non-binary relationship can be represented using binary relationships by creating an artificial entity set. We do this by replacing \(R\) between entity sets \(A, B, C\) by an entity set \(E\), and three relationship sets \(R_i\) relating \(E\) and \(i \in \{A, B, C\}\). We create an identifying attribute for E and add any attributes of \(R\) to \(E\). For each relationship \((a_i, b_i, c_i)\) in \(R\), we

- create a new entity \(e_i\) in the entity set \(E\)

- add \((e_i, j_i)\) to \(R_j\) for \(j \in \{A, B, C\}\)

We also need to translate constraints (which may not be always possible). There may be instances in the translated schema that cannot correspond to any instance of \(R\). We can avoid creating an identifying attribute for \(E\) by making it a weak entity set identified by the three relationship sets.

ER Design Decisions

Important Points

- A weak entity set can be identified by multiple entity sets.

- A weak entity set can be identified by another weak entity set (indirect identification).

- In SQL, the value in a foreign key attribute can be null (the attribute of the relation having the fks constraint).

UML

The Unified Modeling Language has many components to graphically model different aspects of an entire software system. The ER diagram notation we studied was inspired from the UML notation.

~Chapter 7: Functional Dependencies

When programmers usually skip the design phase, they run into problems with their relational database. We shall briefly mention these problems and see the motivation for this chapter.

A good relational design does not have repetition of information, and no unnecessary null values. The only way to avoid the repetition of information is to decompose a relation to different schemas. However, all decompositions are not lossless.

Lecture 13

31-01-22

The term lossy decomposition does not imply loss of tuples but rather the loss of information (relation) among the tuples. How do we formalise this idea?

Lossless Decomposition - 1

Let \(R\) be a relations schema and let \(R_1\) and \(R_2\) form a decomposition of \(R = R_1 \cup R_2\). A decomposition if lossless if there is no loss of information by replacing \(R\) with the two relation schemas \(R_1, R_2\). That is,

\[\pi_{R_1}(r) \bowtie \pi_{R_2}(r) = r\]Note that this relations must hold for all instances to call the decomposition lossless. And, conversely a decomposition is lossy if

\[r \subset \pi_{R_1}(r) \bowtie \pi_{R_2}(r)\]We shall see the sufficient condition in a later section.

Normalization theory

We build the theory of functional/multivalued dependencies to decide whther a particular relation is in a “good” form.

Functional Dependencies

An instance of a relations that satisfies all such real-world constriants is called a legal instance of the relation. A functional dependency is a generalization of the notion of a key.

Let \(R\) be a relation schema and \(\alpha, \beta \subseteq R\). The functional dependency \(\alpha \to \beta\) holds on \(R\) iff for any legal relations \(r(R)\) whenever two tuples \(t_1, t_2\) of \(r\) agree on the attributes \(\alpha\), they also agree on the attributes \(\beta\). That is,

\[\alpha \to \beta \triangleq t_1[\alpha] = t_2[\alpha] \implies t_1[\beta] = t_1[\beta]\]Closure properties

If \(A \to B\) and \(B \to C\) Then \(A \to C\). The set of all functional dependencies logically implied by a functional dependency set \(F\) is the closure of \(F\) denoted by \(F^+\).

Keys and Functional Dependencies

\(K\) is a superket for relation schema \(R\) iff \(K \to R\). \(K\) is a candidate key for \(R\) iff

- \(K \to R\) and

- for no \(A \subset K\), \(A \to R\)

Functional dependencies allow us to express constraints that cannot be expressed using super keys.

Use of functional dependencies

We use functional dependencies to test relations to see if they are legal and to specify constraints on the set of legal relations.

Note. A specific instance of a relation schema may satisfy a functional dependency even if that particular functional dependency does not hold across all legal instances.

Lossless Decomposition - 2

A decomposition of \(R\) into \(R_1\) and \(R_2\) is lossless decomposition if at least one of the following dependecnies is in \(F^+\)

- \[R_1 \cap R_2 \to R_1\]

- \[R_1 \cap R_2 \to R_2\]

The above functional dependencies are a necessary condition only if all constraints are functional dependencies.

Dependency Preservation

Testing functional dependency constrinats each time the database is updated can be costly. If testing a functional dependency can be done by considering just one relation, then the cost of testing this constraint is low. A decomposition that makes it computaitonally hard to enforce functional dependencies is sat to be not dependency preserving.

Lecture 14

01-02-22

Boyce-Codd Normal Form

There are a few designs of relational schema which prevent redundancies and have preferable properties. One such design format is BCNF.

A relation schema \(R\) is in BCNF with respect to a set \(R\) of functional dependencies if for all functional dependencies in \(F^+\) of the form \(\alpha \to \beta\) where \(\alpha, \beta \subseteq R\), at least one of the following holds

- \(\alpha \to \beta\) is trivial (\(\beta \subseteq \alpha\))

- \(\alpha\) is a superkey for \(R\).

Let \(R\) Be a schema \(R\) That is not in BCNF. Let \(\alpha \to \beta\) Be the FD that causes a violation of BCNF. Then, to convert \(R\) to BCNF we decompose it to

- \[\alpha \cup \beta\]

- \[R - (\beta - \alpha)\]

Example. Consider the relation \(R= (A, B, C)\) with \(F \{A \to B, B \to C\}\). Suppose we have the following decompositions

- \[R_1 = (A, B), R_2 = (B, C)\]

This decompositions is lossless-join and also dependency preserving. Notice that it is dependency preserving even though we have the \(A \to C\) constraint. This is because \(A \to C\) is implied from the other two constraints.

- \[R = (A, B), R_2 = (A, C)\]

This decomposition is lossless but is not dependency preserving.

BCNF and Dependency Preservation

It is not always possible to achieve both BCNF and dependency preservation.

Third Normal Form

This form is useful when you are willing to allow a small amount of data redundancy in exchange for dependency preservation.

A relations \(R\) Is in third normal form (3NF) if for all \(\alpha \to \beta \in F^+\) at least one of the following holds

- \(\alpha \to \beta\) is trivial

- \(\alpha\) is a super key for \(R\)

- Each attribute \(A\) In \(\beta - \alpha\) is contained in a candidate key for \(R\).

There are 1NF and 2NF forms but they are not very important. If a relation is in BCNF, then it is in 3NF.

Redundancy in 3NF

Consider \(R\) which is in 3NF \(R = (J, K, L)\) and \(F = \{JK \to L, L \to K \}\). Then, we can have the following instance

| J | K | L |

|---|---|---|

| p1 | q1 | k1 |

| p2 | q1 | k1 |

| p3 | q1 | k1 |

| null | q2 | k2 |

3NF and Dependency Preservation

It is always possible to obtain a 3NF design without sacrificing losslessness or dependency preservation. However, we may have to use null values (like above) to represent some of the possible meaningful relationships among data items.

Goals of Normalisation

A “good” schema consists of lossless decompositions and preserved dependencies. We can use 3NF and BCNF (preferable) for such purpose.

There are database schemas in BCNF that do not seem to be sufficiently normalised. Multivalued dependencies, Insertion anomaly, …

Functional Dependency Theory

Closure of a set of functional dependencies

We can compute \(F^+\) from \(F\) by repeatedly applying Armstrong’s Axioms

- Reflexive rule - If \(\beta \subseteq \alpha\), then \(\alpha \to \beta\)

- Augmentation rule - If \(\alpha \to \beta\), then \(\gamma\alpha \to \gamma\beta\)

- Transitivity rule - If \(\alpha \to \beta\) and \(\beta \to \gamma\) then \(\alpha \to \gamma\)

It is trivial to see that these rules are sound. However, showing that these rules are complete is much more difficult.

Additional rules include (can be derived from above)-

- Union rule - If \(\alpha \to \beta\) and \(\alpha \to \gamma\), then \(\alpha \to \beta\gamma\)

- Decomposition rule - If \(\alpha \to \beta\gamma\), then \(\alpha \to \beta\)

- Pseudo-transitivity rule - If \(\alpha \to \beta\) and \(\gamma\beta \to \delta\), then \(\alpha \gamma \to \delta\)

Closure of Attribute Sets

Given a set of attributes \(\alpha\), define the closure of \(\alpha\) under \(F\) (denoted by \(\alpha^+\)) as the set of attributes that are functionally determined by \(\alpha\) under \(F\). We use the following procedure to compute the closure of A

result := A

while (change) do

for each beta to gamma in F do

begin

if beta in result then result = result union gamma

end

The time complexity of this algorithm is \(\mathcal O(n^3)\) where \(n\) is the number of attributes.

There are several uses of the attribute closure algorithm

- To test if \(\alpha\) is a superkey, we compute \(\alpha\) and check if it contains all attributes of the relation

- To check if a functional dependency \(\alpha \to \beta\) holds, see if \(\beta \subseteq \alpha^+\)

- For computing the closure of \(F\). For each \(\gamma \subseteq R\), we find \(\gamma\) and for each \(S \subset \gamma^+\), we output a functional dependency \(\gamma \to S\).

Canonical Cover

A Canonical cover of a functional dependency set \(F\) is the minimal set of functional dependencies such that its closure is \(F^+\).

An attribute of a functional dependency in \(F\) is extraneous if we can remove it without changing \(F^+\). Removing an attribute from the left side of a functional dependency will make it a stronger constraint.

Lecture 15

05-02-22

Extraneous attributes

Removing an attribute from the left side of a functional dependency makes it a stronger constraint. Attribute A is extraneous in \(\alpha\) if

- \[A \in \alpha\]

- \(F\) logically implies \((F - \{\alpha \to \beta\}) \cup \{(\alpha - A) \to \beta\}\)

To test this, consider \(\gamma = \alpha - \{A\}\). Check if \(\gamma \to \beta\) can be inferred from \(F\). We do this by computing \(\gamma^+\) using the dependencies in \(F\), and if it includes all attributes in \(\beta\) then \(A\) is extraneous in \(\alpha\).

On the other hand, removing an attribute from the right side of a functional dependency could make it a weaker constraint. Attribute A is extraneous in \(\beta\) if

- \[A \in \beta\]

-

The set of functional dependencies

\((F - \{\alpha \to \beta\}) \cup \{\alpha \to ( \beta - A)\}\) logically implies \(F\).

To test this, consider \(F’ = (F - \{\alpha \to \beta\}) \cup \{\alpha \to ( \beta - A)\}\) and check if \(\alpha^+\) contains A; if it does, then \(A\) is extraneous in \(\beta\).

Canonical Cover Revisited

A canonical cover for \(F\) is a set of dependencies \(F_c\) such that

- \(F\)(\(F_c\)) logically implies all dependencies in \(F_c\)(\(F\))

- No functional dependency in \(F_c\) contains an extraneous attribute

- Each left side of functional dependency in $$F_c$$ is unique

To compute a canonical cover for \(F\) do the following

Fc = F

repeat

Use the union rule to replace any dependencies in Fc of the form

alpha -> beta and alpha -> gamma with

alpha -> beta gamma

Find a functional dependency alpha to beta

in Fc with an extraneous attribute

in alpha or in beta

Delete extraneous attribute if found

until Fc does not change

Dependency Preservation - 3

Let \(F_i\) be the set of dependencies \(F^+\) that include only the attribtues in \(R_i\). A decomposition is dependency preserving if \((F_1 \cup \dots \cup F_n)^+ = F^+\). However, we can’t use this definition to test for dependency preserving as it takes exponential time.

We test for dependency preservation in the following way. To check if a dependency \(\alpha \to \beta\) is preserved in a decomposition, apply the following test

result = alpha

repeat

for each Ri in the decomposition

t = (result cap Ri)^+ cap Ri

result = result \cup t

until result does not change

If the result contains all attributes in \(\beta\), then the functional dependency \(\alpha \to \beta\) is preserved. This procedure takes polynomial time.

Testing for BCNF

To check if a non-trivial dependency \(\alpha \to \beta\) cause a violation of BCNF, compute \(\alpha^+\) and verify that it includes all attributes of \(R\). Another simpler method is to check only the dependencies in the given set \(F\) for violations of BCNF rather than checking all dependencies in \(F^+\). If none of the dependencies in \(F\) cause a violation, then none of the dependencies in \(F^+\) will cause a violation. Think.

However, the simplified test using only \(F\) is incorrect when testing a relation in a decomposition of \(R\). For example, consider \(R = (A, B, C, D, E)\), with \(F = \{ A \to B, BC \to D\}\) and the decomposition \(R_1 = (A, B), R_2 = (A, C, D, E)\). Now, neither of the dependencies in \(F\) contain only attributes from \((A, C, D, E)\) so we might be misled into thinking \(R_2\) satisfies BCNF.

Therefore, testing decomposition requires the restriction of \(R^+\) to that particular set of tables. If one wants to the use the original set of dependencies \(F\), then they must check that \(\alpha^+\) either includes no attributes of \(R_i - \alpha\) or includes all attributes of \(R_i\) for every set of attributes \(\alpha \subseteq R\). If a condition \(\alpha \to \beta \in F^+\) violates BCNF, then the dependency \(\alpha \to (\alpha^+ - \alpha) \cap R_i\) can be shown to hold on \(R_i\) and violate BCNF.

In conclusion, the BCNF decomposition algorithm is given by

result = R

done = false

compute F+

while (not done) do

if (there is a schema Ri in result that is not in BCNF)

let alpha to beta be a nontrivial functional dependency

that holds on Ri such that alpha to Ri

is not in F+

and alpha cap beta is null

result = {(result - Ri),(Ri - beta),(alpha, beta)}

else done = true

Note that each \(R_i\) is in BCNF and decomposition is lossless-join.

3NF

The main drawback of BCNF is that is may not be dependency preserving. Through 3NF, we allow some redundancy to acquire dependency preserving along with lossless join.

To test for 3NF, we only have to check the FDs in \(F\) and not all the FDs in \(F^+\).We use attribute closure to check for each dependency \(\alpha \to \beta\), if \(\alpha\) is a super key. If \(\alpha\) is not a super key, we need to check if each attribute in \(\beta\) is contained in a candidate key of \(R\).

However, this test is shown to be NP-hard, but the decomposition into third normal form can be done in polynomial time.

Doesn’t decomposition imply testing? No, one relation can have many 3NF decompositions.

3NF Decomposition Algorithm

Let Fc be a canconical cover for F;

i = 0;

/* initial schema is empty */

for each FD alpha to beta in Fc do

if none of the schemas Rj (j <= i) contains alpha beta

then begin

i = i + 1

Ri = alpha beta

end

/* Here, each of the FDs will be contained in one of the Rjs */

if none of the schemas Rj (j <= i) contains a candidate key for R

then begin

i = i + 1

Ri = any candidate key for R

end

/* Here, there is a relation contianing the candidate key of R */

/* Optionally remove redundant relations */

repeat

if any schema Rj is contained in another schema Rk

then delete Rj

Rj = Ri;

i = i - 1

return (R1, ..., Ri)

Guaranteeing that the above set of relations are in 3NF is the easy part. However, proving that the decomposition is lossless is difficult.

Comparison of BCNF and 3NF

3NF has redundancy whereas BCNF may not be dependency preserving. The bigger problem is 3Nf allows certain function dependencies which are not super key dependencies. However, none of the SQL implementations today support such FDs.

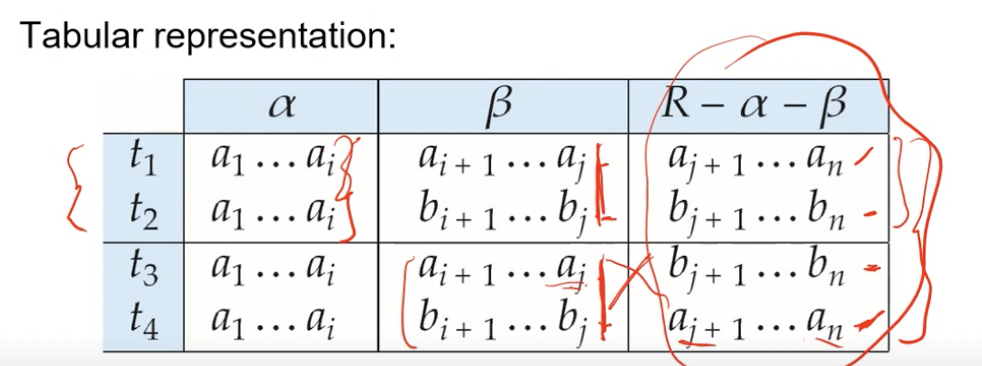

Multivalued Dependencies

Let \(R\) be a relation schema and let \(\alpha, \beta \subseteq R\) . The multivalued dependency

\[\alpha \to\to \beta\]holds on \(R\) if in any legal relation \(r(R)\), for all pairs for tuples \(t_1, t_2\) in \(r\) such that \(t_1[\alpha] = t_2[\alpha]\), there exists tuples \(t_3\) and \(t_4\) in \(r\) such that

\[t_1[\alpha] = t_2[\alpha] = t_3[\alpha] = t_4[\alpha] \\ t_3[\beta] = t_1[\beta] \\ t_3[R - \beta] = t_2[R - \beta] \\ t_4[\beta] = t_2[\beta] \\ t_4[R - \beta] = t_1[R - \beta]\]Intuitively, it means that the relationship between \(\alpha\) and \(\beta\) is independent of the remaining attributes in the relation. The tabular representation of these conditions is given by

The definition can also be mentioned in a more intuitive manner. Consider the attributes of \(R\) that are partitioned into 3 nonempty subsets \(W, Y, Z\). We say that \(Y \to\to Z\) iff for all possible relational instances \(r(R)\),

\[<y_1, z_1, w_1> \in r \text{ and } < y_1, z_2, w_2 > \in r \\ \implies \\ <y_1, z_1, w_2 > \in r \text{ and } <y_1, z_2, w_1 > \in r\]Important Points-

- If \(Y \to\to Z\) then \(Y \to\to W\)

- If \(Y \to Z\) then \(Y \to\to Z\)

why?

The closure \(D^+\) of \(D\) is the set of all functional and multivalued dependencies logically implied by \(D\). We are not covering the reasoning here.

Fourth Normal Form

A relation schema \(R\) is in 4NF rt a set \(D\) of functional and multivalued dependencies if for all multivalued dependencies in \(D^+\) in the form \(\alpha \to\to \beta\), where \(\alpha, \beta \subseteq R\), at least one of the following hold

- \(\alpha \to\to \beta\) is trivial

- \(\alpha\) is a super key for schema \(R\)

If a relation is in 4NF, then it is in BCNF. That is, 4NF is stronger than BCNF. Also, 4NF is the generalisation of BCNF for multivalued dependencies.

Restriction of MVDs

The restriction of \(D\) to \(R_i\) is the set \(D_i\) consisting of

- All functional dependencies in \(D^+\) that include only attributes of \(R_i\)

- All multivalued dependencies of the form \(\alpha \to \to (\beta \cap R_i)\) where \(\alpha \in R_i\) and \(\alpha \to\to \beta \in D^+\).

4NF Decomposition Algorithm

result = {R};

done = false;

compute D+

Let Di denote the restriction of D+ to Ri

while(not done)

if (there is a schema Ri in result not in 4NF)

let alpha to to beta be a nontrivial MVD that holds

on Ri such that alpha to Ri is not in Di and

alpha cap beta is null

result = {result - Ri, Ri - beta, (alpha, beta)}

else done = true

This algorithm is very similar to that of BCNF decomposition.

Lecture 16

07-02-22

Further Normal Forms

Join dependencies generalise multivalued dependencies and lead to project-join normal form (PJNF) also known as 5NF. A class of even more general constraints leads to a normal form called domain-key normal form. There are hard to reason with and no set of sound and complete set of inference rules exist.

Overall Database Design Process

We have assumed \(R\) is given. In real life, we can get it based on applications through ER diagrams. However, one can consider \(R\) to be generated from a single relations containing all attributes that are of interest (called universal relation). Normalisation breaks this \(R\) into smaller relations.

Some aspects of database design are not caught by normalisation. For example, a crosstab, where values for on attribute become column names, is not captured by normalisation forms.

Modeling Temporal Data

Temporal data have an associated time interval during which the data is valid. A snapshot is the value of the data at a particular point in time. Adding a temporal component results in functional dependencies being invalidated because the attribute values vary over time. A temporal functional dependency \(X \xrightarrow{\tau} Y\) holds on schema \(R\) if the functional dependency \(X \to Y\) holds on all snapshots for all legal instances \(r(R)\).

In practice, database designers may add start and end time attributes to relations. SQL standard [start, end). In modern SQL, we can write

period for validtime (start, end)

primary key (course_id, validtime without overlaps)

~Chapter 8: Complex Data Types

Expected to read from the textbook.

- Semi-Structured Data

- Object Orientation

- Textual Data

- Spatial Data

Semi-Structured Data

Many applications require storage of complex data, whose schema changes often. The relational model’s requirement of atomic data types may be an overkill. JSON (JavaScript Object Notation) and XML (Extensible Markup Language) are widely used semi-structured data models.

Flexible schema

- Wide column representation allow each tuple to have a different set of attributes and can add new attributes at any time

- Sparse column representation has a fixed but large set of attributes but each tuple may store only a subset.

Multivalued data types

- Sets, multi-sets

- Key-value map

- Arrays

- Array database

JSON

It is a verbose data type widely used in data exchange today, There are efficient data storage variants like BSON

Knowledge Representation

Representation of human knowledge is a long-standing goal of AI. RDF: Resource Description Format is a simplified representation for facts as triples of the form (subject, predicate, object). For example, (India, Population, 1.7B) is one such form. This form has a natural graph representation. There is a query language called SparQL for this representation. Linked open data project aims to connect different knowledge graphs to allow queries to span databases.

To represent n-ary relationships, we can

- Create an artificial entity and link to each of the n entities

- Use quads instead of triples with context entity

Object Orientation

Object-relational data model provides richer type system with complex data types and object orientation. Applications are often written in OOP languages. However, the type system does not match relational type system and switching between imperative language and SQL is cumbersome.

To use object-orientation with databases, we could build an object-relational database, adding object-oriented features to a relational database. Otherwise, we could automatically convert data between OOP and relational model specified by object-relational mapping. Object-oriented database is another option that natively supports object-oriented data and direct access from OOP. The second method is widely used now.

Object-Relational Mapping

ORM systems allow

- specification of mapping between OOP objects and database tuples

- Automatic modification of database

- Interface to retrieve objects satisfying specified conditions

ORM systems can be used for websites but not for data-analytics applications!

Textual Data

Information retrieval basically refers to querying of unstructured data. Simple model of keyword queries consists of fetching all documents containing all the input keywords. More advanced models rank the relevance of documents.

Ranking using TF-IDF

Term is a keyword occurring in a document query. The term frequency \(TF(d, t)\) is the relevance of a term \(t\) to a document \(d\). It is defined by

\[TF(d, t) = \log( 1+ n(d, t)/n(d))\]where \(n(d, t)\) is the number of occurrences of term \(t\) in document \(d\) and \(n(d)\) is the number of terms in the document \(d\).

The inverse document frequency \(IDF(t)\) is given by

\[IDF(t) = 1/n(t)\]This is used to give importance to terms that are rare. Relevance of a document \(d\) to a set of terms \(Q\) can be defined as

\[r(d, Q) = \sum_{t \in Q} TF(d, t)*IDF(t)\]There are other definitions that take proximity of words into account and stop words are often ignored.

Lecture 17

08-02-22

The TF-IDF method in search engine was did not work out as web designers added repeated occurrences of words on their website to increase the relevance. There were plenty of shady things web designers could do in order to increase the page relevance. To prevent this problem, Google introduced the model of PageRank.

Ranking using Hyperlinks

Hyperlinks provide very important clues to importance. Google’s PageRank measures the popularity/importance based on hyperlinks to pages.

- Pages hyperlinked from many pages should have higher PageRank

- Pages hyperlinked from pages with higher PageRank should have higher PageRank

This model is formalised by a random walk model. Let \(T[i, j]\) be the probability that a random walker who is on page \(i\) will click on the link to page \(j\). Then, PageRank[j] for each page \(j\) is defined as

\[P[j] = \delta/N + (1 - \delta)*\sum_{i = 1}^n(T(i, j)*P(j))\]where \(N\) is the total number of pages and \(\delta\) is a constant usually set to \(0.15\). As the number of pages are really high, some sort of bootstrapping method (Monte Carlo simulation) is used to approximate the PageRank. PageRank also can be fooled using mutual link spams.

Retrieval Effectiveness

Measures of effectiveness

- Precision - what % of returned results are actually relevant.

- Recall - what percentage of relevant results were returned

Spatial Data

Not covered

~Chapter 9: Application Development

HTTP and Sessions

The HTTP protocol is connectionless. That is, once the server replied to a request, the server closes the connection with the client, and forgets all about the request. The motivation to this convention is that it reduces the load on the server. The problem however is authentication for every connection. Information services need session information to acquire user authentication only once per session. This problem is solved by cookies.

A cookie is a small piece of text containing identifying information

- sent by server to browser on first interaction to identify session

- sent by browser to the server that created the cookie on further interactions (part of the HTTP protocol)

- Server saved information about cookies it issued, and can use it when serving a request. E.g, authentication information, and user preferences

Cookies can be stored permanently or for a limited time.

Java Servlet defines an API for communication between the server and app to spawn threads that can work concurrently.

Web Services

Web services are basically URLs on which we make a request to obtain results.

Till HTML4, local storage was restricted to cookies. However, this was expanded to any data type in HTML5.

HTTP and HTTPS

The application server authenticates the user by the means of user credentials. What if a hacker scans all the packets going to the server to obtain a user’s credentials? So, HTTPS was developed to encrypt the data sent between the browser and the server. How is this encryption done? The server and the browser need to have a common key. Turns out, there are crypto techniques that can achieve the same.

What if someone creates a middleware that simulates a website a user uses? This is known as man in the middle attack. How do we know we are connected to the authentic website? The basic idea is to have a public key of the website and send data encrypted via this public key. Then, the website uses its own private key to decrypt the data. This conversion is reversible. As in, the website encrypts the data using its own private key which can be decoded by the user using the public key.

How do we get public keys for millions of websites out there? We use digital certificates. Let’s say we have a website’s public key and that website has the public key of the user (via their website). The website then encrypts the user’s public key using its private key to generate a digital certificate. This digital certificate can be advertised on the user’s website to allow other users to check the authenticity of the user’s website. Now, another user can obtain this certificate, decrypt it using the first website’s private key, and verify the authenticity of the user’s webpage. These verifications are maintained as a hierarchical structure to maintain digital certificates of millions of websites.

Cross Site Scripting

In cross site scripting, the user’s session for one website is used in another website to execute actions at the server of the first website. For example, suppose a bank’s website, when logged in, allows the user to transfer money by visiting the link xyz.com/?amt=1&to=123. If another website has a similar link (probably for displaying an image), then it can succeed in transferring the amount if the user is still logged into the bank. This vulnerability is called called cross-site scripting (XSS) or cross-site request forgery (XSRF/CSRF). XSRF tokens are a form of cookies that are used to check these cross-site attacks (CORS from Django).

Lecture 18

14-02-22

Application Level Authorisation

Current SQL standard does not allow fine-grained authorisation such as students seeing their own grades but not others. Fine grained (row-level) authorisation schemes such as Oracle Virtual Private Database (VPD) allows predicates to be added transparently to all SQL queries.

~Chapter 10: Big Data

Data grew in terms of volume (large amounts of data), velocity (higher rates of insertions) and variety (many types of data) in the recent times. This new generation of data is known as Big Data.

Transaction processing systems (ACID properties) and query processing systems needed to be made scalable.

Distributed File Systems

A distributed file system stores data across a large collection of machines, but provides a single file-system view. Files are replicated to handle hardware failure, and failures were to be detected and recovered from.

Hadoop File System Architecture

A single namespace is used for an entire cluster. Files are broken up into blocks (64 MB) and replicated on multiple DataNodes. A client finds the location of blocks from NameNode and accesses the data from DataNodes.

The key idea of this architecture is using large block sizes for the actual file data. This way, the metadata would be reduced and the NameNode can store the DataNodes info in a much more scalable manner.

Distributed file systems are good for millions of large files. However, distributed file systems have very high overheads and poor performance with billions of smaller tuples. Data coherency also needs to be ensured (write-once-read-many access model).

Sharding

It refers to partitioning data across multiple databases. Partitioning is usually done on some partitioning attributes known as partitioning keys or shard keys. The advantage to this is that it scales well and is easy to implement. However, it is not transparent (manually writing all routes and queries across multiple databases), removing load from an overloaded database is not easy, and there is a higher change of failure. Sharding is used extensively by banks today.

Key Value Storage Systems

These systems store large numbers of small sized records. Records are partitioned across multiple machines, and queries are routed by the system to appropriate machine. Also, the records are replicated across multiple machines to ensure availability. Key-value stores ensure that updates are applied to all replicas to ensure consistency.

Key-value stores may have

- uninterpreted bytes with an associated key

- Wide-column with associated key

- JSON