Operating System Notes

Lecture 1 - Introduction

What is an operating system?

An operating system is a middleware between user programs and system hardware.

| User Programs |

|---|

| OS |

| Hardware: CPU, Memory, Disk, I/O |

An operating system manages hardware: CPU, Main Memory, IO devices (disk, network, card, mouse, keyboard, etc.)

What happens when you run a program? (Background)

A compiler translated high level programs into an executable (“.c” to “a.out”)

The executable contains instructions that the CPU can understand and the program’s data (all numbered with addresses).

Instructions run on CPU: hardware implements an Instruction Set Architecture (ISA).

CPU also consists of a few registers, e.g.,

- Pointer to current instruction (PC)

- Operands of the instructions, memory addresses

To run an exe, the CPU does the following:

- Fetches instruction ‘pointed at’ by PC from memory

- Loads data required by the instructions into registers

- Decodes and executes the instruction

- Stores the results to memory

Most recently used instructions and data are in CPU cache (instruction cache and data cache) for faster access.

What does the OS do?

OS manages CPU

It initializes program counter (PC) and other registers to begin execution. OS provides the process abstraction.

Process: A running program

OS creates and manages processes. Each process has the illusion of having the complete CPU, i.e., OS virtualizes CPU. It timeshares the CPU between processes. It also enables coordination between processes.

OS manages memory

It loads the program executable (code, data) from disk to memory. It has to manage code, data, stack, heap, etc. Each process thinks it has a dedicated memory space for itself, numbers code, and data starting from 0 (virtual addresses).

The operating system abstracts out the details of the actual placement in memory, translates from virtual addresses to real physical addresses.

Hence, the process does not have to worry about where its memory is allocated in the physical space.

OS manages devices

OS helps in reading/writing files from the disk. OS has code to manage disk, network card, and other external devices: device drivers.

Device driver: Talks the language of the hardware devices.

It issues instructions to devices (fetching data from a file). It also responds to interrupt events from devices (pressing a key on the keyboard).

The persistent (ROM) data is organised as a file system on the disk.

Design goals of an operating system

- Convenience, abstraction of hardware resources for user programs.

- Efficiency of usage of CPU, memory, etc.

- Isolation between multiple processes.

History of operating systems

OS started out as a library to provide common functionality across programs. Later, it evolved from procedure calls to system calls.

When a system call is made to run OS code, the CPU executes at a higher privilege level.

OS evolved from running a single program to executing multiple processes concurrently.

Lecture 2 - The Process Abstraction

An operating system provides process abstraction. That is, when you execute a program, the OS creates a process. It timeshares CPU across multiple processes (virtualizing CPU). An OS also has a CPU scheduler that picks one of the many active processes to execute on a CPU. This scheduler has 2 components.

- Policy - It decides which process to run on the CPU

- Mechanism - How to “context-switch” between processes

What constitutes a process?

Every process has a unique identifier (PID) and a memory image - the fragments of the program present in the memory. As mentioned earlier, a memory image has 4 components (code, data, stack, and heap).

When a process is running, a process also has a CPU context (registers). This has components such as program counter, current operands, and stack pointer. These basically store the state of the process.

A process also has File descriptors - pointers to open files and devices.

How does an OS create a process?

Initially, the OS allocates and creates a memory image. It loads the code and data from the disk executable. Then, it makes a runtime stack and heap.

After this, the OS opens essential files (STD IN, STD OUT, STD ERR). Then the CPU registers are initialized, and the PC points to the first instruction.

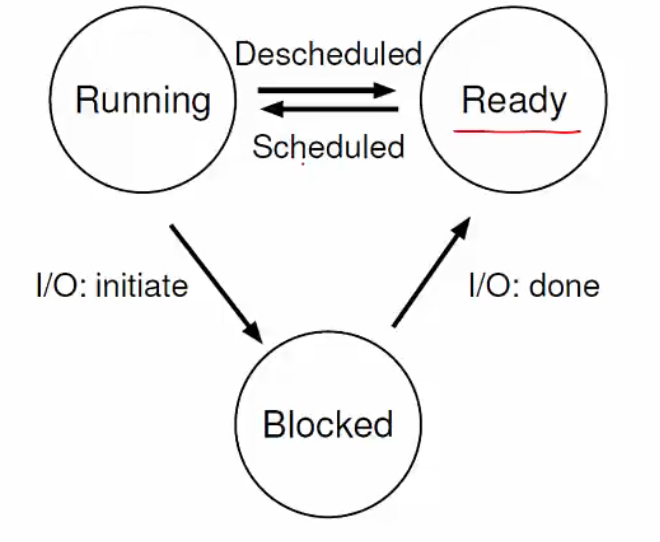

States of a process

-

A process that is currently being executed in the CPU is Running.

-

Processes that are waiting to be scheduled are Ready. These are not yet executed.

-

Some processes may be in the Blocked state. They are suspended and not ready to run. These processes may be waiting for some event, e.g., waiting for input from the user. They are unblocked once an interrupt is issued.

-

New processes are being created and are yet to run.

-

Dead processes have finished executing and are terminated.

Process State Transitions

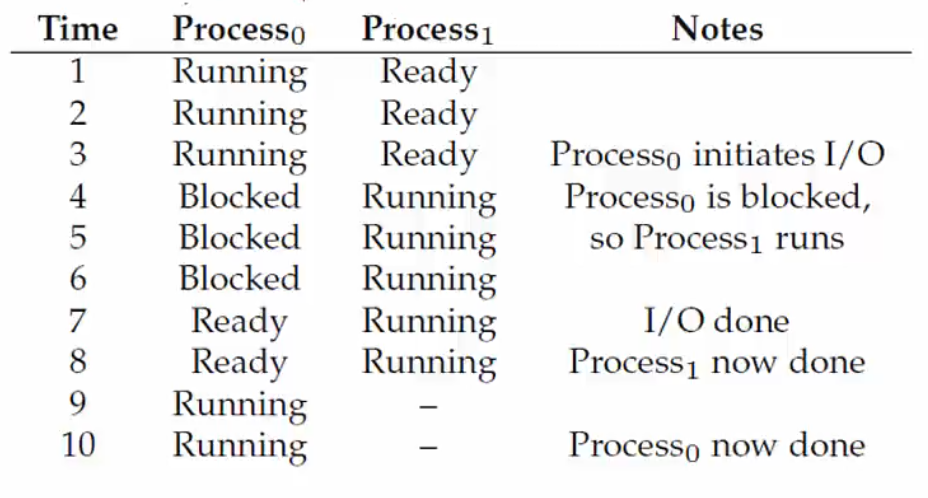

When one process is blocked, another process can be executed to utilize the resources effectively. Here is a simple example reflecting this situation.

|

|---|

| Example: Process States |

OS data structures

An operating system maintains a data structure of all active processes. One such structure is the process control block (PCB). Information about each process is stored in a PCB.

Different Operating Systems might use other names for this structure. PCB is the generic name. All the processes running on the system are stored in a list of PCBs.

A PCB has the following components of a process:

- Process identifier

- Process state

- Pointers to other related processes (parent)

- CPU context of the process (saved when the process is suspended)

- Pointers to memory locations

- Pointers to open files

Lecture 21 - xv6 introduction and x86 background

xv6 is a simple OS used for teaching OS concepts. We shall be using the x86 version of the OS.

An OS enables users to run the processed stored in memory on the CPU.

- It loads the process code/data in main memory.

- CPU fetches, decodes, executes instructions in program code.

- It fetches the process data from memory to CPU registers for faster access during instruction execution (We studied in Arch that memory access is expensive).

- Recently fetched code/data is stored in the CPU in the form of cache for future access.

Memory Image of a process

The memory image of a process consists of

- Compiled code (CPU instructions)

- Global/static variables (memory allocated at compile time)

- Heap (dynamic memory allocation via,

malloc,newetc) that grows (up) on demand. - Stack (temporary storage during function calls, e.g., local variables) that usually grows “up” towards lower addresses. It shrinks “down” as memory is freed (exiting function call).

- Other things like shared libraries.

Every instruction/data has an address, used by the CPU to fetch/store (Virtual addresses managed by OS). Consider the following example

int *iptr = malloc(sizeof(int))

Here, iptr itself is on stack but it points to 4 bytes (size of int) in the heap.

x86 registers

Registers are a small space for data storage with the CPU. Every CPU architecture has its set of registers used during computation. The names of these registers vary across different architectures. These are the common types of registers:

- General purpose registers - stores data during computations (

eax, ebx, ecx, edx, esi, edi). - Pointers to stack locations - base of stack (

ebp) and top of stack (esp). - Program counter or instruction pointer (

eip) - Next instruction to execute. - Control registers - Hold control information or metadata of a process (e.g.,

cr3has pointer to page table of the process). A page table helps the OS to keep track of the memory of the process. - Segment registers (

cs, ds, es, fs, gs, ss) - information about segments (related to memory of process).

x86 Instructions

Every CPU can execute a set of instructions defined in its ISA. The compiled code is written using this ISA so that the CPU can execute these instructions. Here are some common instructions used:

- Load/store -

mov src, dst(AT&T syntax - usingsrcbeforedst)-

mov %eax, %ebx- copy contents ofeaxtoebx -

mov (%eax), %ebx- copy contents at the address ineaxintoebx -

mov 4(%eax), %ebx- copy contents stores at offset of 4 bytes from address stored ateaxintoebx

-

- Push/pop on stack - changes

esp-

push %eax- push contents ofeaxonto stack, updateesp -

pop %eax- pop top of stack ontoeax, updateesp

-

-

jmpsetseipto a specified address -

callto invoke a function,retto return from a function - Variants of above (

movw, pushl) for different register sizes.

Privilege Levels

x86 CPUs have multiple privilege levels called rings (0 to 3). Ring 0 has the highest privilege and OS code runs at this level. Ring 3 has the lowest privilege and user code runs at this level.

There are two types of instructions - privileged and unprivileged. Privileged instructions perform sensitive operations which ideally should not be performed by user programs. These can be executed by the CPU only when running at the highest privilege level (ring 0) .

For example, writing into cr3 register (setting page table) is a privileged instruction. We don’t want a user manipulating memory of another process. instructions to access I/O devices is also privileged.

Unprivileged instructions can be run at lower privilege levels.

Even ring 0?

For example, user code running at a lower privilege level can store a value into a general purpose register.

When a user required OS services (system calls), the CPU moves to higher privilege level and executes OS code that contains privileged instructions. User code cannot invoke privileged instructions directly.

Function calls and the stack

Local variables and arguments are stored on stack for the duration of a function call. When a function is called:

- Arguments are pushed onto the stack.

-

call function- pushes the return address on stack and jumps to function. That is,eipshifts from top of stack to function implementation. - Local variables are allocated on stack

- Function code is executed

-

ret- instruction pops return address,eipgoes back to the old value.

What exactly is happening above?

In this way, stack acts as a temporary storage for function calls.

Before making a function call, we may have to store values of some registers. This is because, registers can get clobbered during a function call.

- Some registers are saved on stack by caller before invoking the function (caller save registers). The function code (callee) can freely change them, and the caller restores them later.

- Some registers are saved by callee, and are restored after function ends (callee save registers). Caller expects them to have same value on return.

- Return value stored in

eaxregister by callee (one of caller save registers)

All of the above is automatically done by the C compiler (C calling convention). Every language has a calling convention that decides which registers have to be classified as caller and callee.

Caller and Callee both store the registers?

Timeline of a function call is as follows (Note. Stack grows up from higher to lower addresses):

- Caller save registers are pushed (

eax, ecx, edx) - Arguments of the function are pushed in reverse order onto the stack

- The return address or the old

eipis pushed on stack by thecallinstruction - The old

ebpis also pushed onto the stack - Set

ebpto the current top of the stack (base of new “stack frame” of the function) - Push local variables and callee save registers (

ebx, esi, edi).espautomatically goes up as you push things onto the stack. - The function is executed.

- After the function execution, the current stack frame is popped to restore the old

ebp. - The return address is popped and

eipis restored by theretinstruction.

Stack pointers: ebp stores the address of base of the current stack frame and esp stores the address of current top of stack. This way, function arguments are accessible from looking under the stack base pointer.

C vs. assembly for OS code

Most of xv6 is in C! The assembly code is automatically generated by the compiler (including all the stack manipulations for function calls).

However, small parts of the OS are in assembly language. This is because, the OS needs more controls over what needs to be done in some situations. For example, the logic of switching from stack of one process to stack of another cannot be written in a high-level language.

Therefore, basic understanding of x86 assembly language is required to follow some nuances of xv6 code.

More on CPU hardware

Some aspects of CPU hardware that are not relevant to studying OS:

- CPU cache - CPU stores recently fetched instructions and data in multiple levels of cache. The operating system has no visibility or control intro the CPU cache.

- Hyper-threading - A CPU core can run multiple processed concurrently via hyper-threading. From an OS perspective, 4-core CPU with 2 hyper-threads per core, and 8-core CPU with no hyper-threading will look the same, even though the performance may differ. The OS will schedule processes in parallel on the 8 available processors.

Lecture 22: Processes in xv6

The process abstraction

The OS is responsible for concurrently running multiple processes (on one or more CPU cores/processors)

- Create, run, terminate a process

- Context switch from one process to another

- Handle any events (e.g., system calls from process)

OS maintains all information about an active process in a process control block (PCB)

- Set of PCBs of all active processes is a critical kernel data structure

- Maintained as part of kernel memory (part of RAM that stores kernel code and data, more on this later)

PCB is known by different names in different OS

-

structprocin xv6 -

task_structin Linux

PCB in xv6: struct proc

The different states of a process in xv6 (procstate) are given by UNUSED, EMBRYO (new), SLEEPING (blocked), RUNNABLE (ready), RUNNING, ZOMBIE (dead)

The struct proc has

- Size of the process

- Pointer to the apge table

- Bottom of the kernel stack for this process

- Process state

- Process ID

- Parent process

- Pointer to folder in which process is running

- Some more stuff which we will study later

Kernel Stack

Register state (CPU context) is saved on user stack during the function calls to restore/resume later. Likewise, the CPU context is stored on kernel stack when process jumps into OS to run kernel code.

We use a separate stack because the OS does not trust the user stack. It is a separate area of memory per process within the kernel, not accessible by regular user code. It is linked from struct proc of a process.

List of open files

Array of pointers to open files (struct file has info about the open file)

- When user opens a file, a new entry is created in this array, and the index of that entry is passed as a file descriptor to user

- Subsequent read/write calls on a file use this file descriptor to refer to the file

-

First 3 files (array indices 0,1,2) open by default for every process: standard input, output and error

- Subsequent files opened by a process will occupy later entries in the array

Page table

Every instruction or data item in the memory image of process has an address. Page table of a process maintains a mapping between the virtual addresses and physical addresses.

Process table (ptable) in xv6

It has a lock for protection. It is an array of all processes. Real kernels have dynamic-sized data structures. However, xv6 being a dummy OS, has a static array.

A CPU scheduler in the OS loops over all runnable processes, picks one, and sets it running on the CPU.

Process state transtition examples

A process that needs to sleep will set its state to SLEEPING and invoke scheduler.

A process that has run for its fair share will set itself to RUNNABLE and invoke Scheduler. The Scheduler will once again find another RUNNABLE process and set it to RUNNING.

Live Session 1

- Real memory is less than virtual memory. It is easier to let the process think it has the whole memory rather than telling it how much memory it exactly has.

- Memory for Global variables and Function variables is allocated once! We don’t know how many times each function will be called. Therefore, we just assign it once and use the allocated space for repeated calls.

- We have caller and callee registers for storing existing computations in the registers before a function call. We can’t have only one set (callee or caller) store all these values due to some subtle reasons. A caller save register would have to pass some arguments. A callee save register would have to return some arguments. To avoid all this, we have a separate set of caller and callee registers.

- “Only one process can run on a core at any time”. Basically that the OS sees the processor as two different cores in hyper-threading. Therefore, it can run a single process on a core.

- xv6 is primarily written in C. If we need an OS to compile and run a program, how do we run xv6? Whenever you boot a system with an OS, you use an existing OS to build the binaries for the needed OS. Then, you run the binary to run the OS. To answer the chicken and egg problem, someone might’ve written an OS in assembly code initially.

- OS keeps track of locations of memory images using page tables.

- Virtual addresses of an array will be contiguous, but the OS may not allocate contiguous memory.

- When we print the address of a pointer, we get the virtual address of the variable. Exposing the real address is a security risk.

Lecture 3 - Process API

We will discuss the API that the OS provides to create and manage processes.

What is the API?

So, the API refers to the functions available to write user programs. The API provided by the OS is a set of system calls. Recall, a system call is like a function call, but it runs at a higher privilege level. System calls can perform sensitive operations like access to hardware. Some “blocking” system calls cause the process to be blocked and unscheduled (e.g., read from disk).

Do we have to rewrite programs for each OS?

No, we don’t. This is possible due to the POSIX API. This is a standard set of system calls that OS must implement. Programs written to the POSIX API can run on any POSIX compatible OS. Almost every modern OS has this implemented, which ensures program portability. Program language libraries hide the details of invoking system calls. This way, the user does not have to worry about explicitly invoking system calls.

For ex, the printf function in the C library calls the write system call to write to the screen.

Process related system calls (in Unix)

The most important system call to create a process is the fork() system call. It creates a new child process. All processes are created by forking from a parent. The init process is the ancestor of all processes. When the OS boots up, it creates the init process.

exec() makes a process execute a given executable. exit() terminates a process, and wait() causes a parent to block until a child terminates. Many variants of the above system calls exist with different arguments.

What happens during a fork?

A new process is created by making a copy of the parent’s memory image. This means the child’s code is exactly the same as the parent’s code. The new process is added to the OS process list and scheduled. Parent and child start execution just after the fork statement (with different return values).

Note that parent and child execute and modify the memory data independently (the memory images being a copy of one another does not propagate).

The return values for fork() are set as follows:

-

0for the child process -

< 0ifforkfailed -

PID of the childin the parent

Terminating child processes

A process can be terminated in the following situations

- The process calls

exit()(exit()is called automatically when the end of main is reached) - OS terminated a misbehaving process

The processes are not immediately deleted from the process list upon termination. They exist as zombies. These are cleared out when a parent calls wait(NULL). A zombie child is then cleaned up or “reaped”.

wait() blocks the parent until the child terminates. There are some non-blocking ways to invoke wait.

What if the parent terminates before its child? init process adopts orphans and reaps them. Dark, right? If the init process does not do this, zombies will eat up the system memory. Why do we need zombies? (Too many brains in the world). There are subtle reasons for this, which are out of scope for this discussion.

What happens during exec?

After forking, the parent and the child are running the same code. This is not useful! A process can run exec() to load another executable to its memory image. This allows a child to run a different program from the parent. There are variants of exec(), e.g., execvp(), to pass command-line arguments to the new executable.

Case study: How does a shell work?

In a basic OS, the init process is created after the initialization of hardware. The init spawns a lot of new processes like bash. bash is a shell. A shell reads user commands, forks a child, execs the command executable, waits for it to finish, and reads the next command.

Standard commands like ls are all executables that are simply exec‘ed by the shell.

More funky things about the shell

A shell can manipulate the child in strange ways. For example, you can redirect the output from a command to a file.

prompt > ls > foo.txt

This is done via spawning a child, rewires its standard output to a file, and then calls the executable.

close(STDOUT_FILENO);

open("./p4.output", O_CREAT|O_WRONLY|O_TRUNC, S_IRWXU);

We can similarly modify the input for a process.

Lecture 23 - System calls for process management in xv6

Process system calls: Shell

When xv6 boots up, it starts the init process (the first user process). Init forks shell, which prompts for input. This shell process is the main screen that we see when we run xv6.

Whenever we run a command in the shell, the shell creates a new child process, executes it, waits for the child to terminate, and repeats the whole process again. Some commands have to be executed by the parent process itself and not by the child. For example, cd command should change the parent’s (shell) current directory, not of the child. Such commands are directly executed by the shell itself without forking a new process.

What happens on a system call?

All the system calls available to the users are defined in the user library header ‘user.h’. This is equivalent to a C library header (xv6 doesn’t use a standard C library). System call implementation invokes a special trap instruction called int in x86. All the system calls are defined in “usys.S”.

The trap (int) instruction causes a jump to kernel code that handles the system call. Every system call is associated with a number which is moved into eax to let the kernel run the applicable code. We’ll learn more about this later, so don’t worry about this now.

Fork system call

Parent allocates new process in ptable, copies parent state to the child. The child process set is set to runnable, and the scheduler runs it at a later time. Here is the implementation of fork()

int

fork(void)

{

int i, pid;

struct proc *np;

struct proc *curproc = myproc();

// Allocate process.

if((np = allocproc()) == 0){

return −1;

}

// Copy process state from proc.

if((np−>pgdir = copyuvm(curproc−>pgdir, curproc−>sz)) == 0){

kfree(np−>kstack);

np−>kstack = 0;

np−>state = UNUSED;

return −1;

}

np−>sz = curproc−>sz;

np−>parent = curproc;

*np−>tf = *curproc−>tf;

// Clear %eax so that fork returns 0 in the child.

np−>tf−>eax = 0;

for(i = 0; i < NOFILE; i++)

if(curproc−>ofile[i])

np−>ofile[i] = filedup(curproc−>ofile[i]);

np−>cwd = idup(curproc−>cwd);

safestrcpy(np−>name, curproc−>name, sizeof(curproc−>name));

// Set new pid

pid = np−>pid;

acquire(&ptable.lock);

// Set Process state to runnable

np−>state = RUNNABLE;

release(&ptable.lock);

// Fork system call returns with child pid in parent

return pid;

}

Exec system call

The source code is a little bit complicated. The key steps include

- Copy new executable into memory, replacing the existing memory image

- Create new stack, heap

- Switch process page table to use the new memory image

- Process begins to run new code after system call ends

Exit system call

Exiting a process cleans up the state and passes abandoned children to init. It marks the current process as a zombie and invokes the scheduler.

void

exit(void)

{

struct proc *curproc = myproc();

struct proc *p;

int fd;

if(curproc == initproc)

panic("init exiting");

// Close all open files.

for(fd = 0; fd < NOFILE; fd++){

if(curproc−>ofile[fd]){

fileclose(curproc−>ofile[fd]);

curproc−>ofile[fd] = 0;

}

}

begin_op();

iput(curproc−>cwd);

end_op();

curproc−>cwd = 0;

acquire(&ptable.lock);

// Parent might be sleeping in wait().

wakeup1(curproc−>parent);

// Pass abandoned children to init.

for(p = ptable.proc; p < &ptable.proc[NPROC]; p++){

if(p−>parent == curproc){

p−>parent = initproc;

if(p−>state == ZOMBIE)

wakeup1(initproc);

}

}

// Jump into the scheduler, never to return.

curproc−>state = ZOMBIE;

// Invoke the scheduler

sched();

panic("zombie exit");

}

Remember, the complete cleanup happens only when a parent reaps the child.

Wait system call

It must be called to clean up the child processes. It searches for dead children in the process table. If found, it cleans up the memory and returns the PID of the dead child. Otherwise, it sleeps until one dies.

int

wait(void)

{

struct proc *p;

int havekids, pid;

struct proc *curproc = myproc();

acquire(&ptable.lock);

for(;;){

// Scan through table looking for exited children.

havekids = 0;

for(p = ptable.proc; p < &ptable.proc[NPROC]; p++){

if(p−>parent != curproc)

continue;

havekids = 1;

if(p−>state == ZOMBIE){

// Found one.

pid = p−>pid;

kfree(p−>kstack);

p−>kstack = 0;

freevm(p−>pgdir);

p−>pid = 0;

p−>parent = 0;

p−>name[0] = 0;

p−>killed = 0;

p−>state = UNUSED;

release(&ptable.lock);

return pid;

}

}

// No point waiting if we don’t have any children.

if(!havekids || curproc−>killed){

release(&ptable.lock);

return −1;

}

// Wait for children to exit. (See wakeup1 call in proc_exit.)

sleep(curproc, &ptable.lock);

}

}

Summary of process management system calls in xv6

- Fork - process marks new child’s

struct procasRUNNABLE, initializes child memory image and other states that are needed to run when scheduled - Exec - process reinitializes memory image of user, data, stack, heap, and returns to run new code.

- Exit - process marks itself as

ZOMBIE, cleans up some of its state, and invokes the scheduler - Wait - parent finds any

ZOMBIEchild and cleans up all its state. IF no dead child is found, it sleeps (marks itself asSLEEPINGand invokes scheduler).

When do we call wait in the parent process?

Lecture 4 - Mechanism of process execution

In this lecture, we will learn how an OS runs a process. How it handles system calls, and how it context switches from one process to the other. We are going to understand the low-level mechanisms of these.

Process Execution

The first thing the OS does is, it allocates memory and create a memory image. It also sets up the program counter and other registers. After the setup, the OS is out of the way, and the process executes directly on the CPU by itself.

A simple function call

A function call translates to a jump instruction. A new stack frame is pushed onto the stack, and the stack pointer (SP) is updated. The old value of the PC (return value) is pushed to the stack, and the PC is updated. The stack frame contains return value, function arguments, etc.

How is a system call different?

The CPU hardware has multiple privilege levels. The user mode is used to run the user code. OS code like the system calls run in the kernel mode. Some instructions execute only in kernel mode.

The kernel does not trust the user stack. It uses a separate kernel stack when in kernel mode. The kernel also does not trust the user-provided addresses. It sets up an Interrupt Descriptor Table (IDT) at boot time. The IDT has addresses of kernel functions to run for system calls and other events.

Mechanism of a system call: trap instruction

A special trap instruction is run when a system call must be made (usually hidden from the user by libc). The trap instruction initially moves the CPU to a high privilege level. The stack pointer is updated to switch to the kernel stack. Here, the context, such as old PC, registers, etc., is saved. Then, the address of the system call is looked up in the IDT, and the PC jumps to the trap handler function in the OS code.

The trap instruction is executed on the hardware in the following cases -

- System call - Program needs OS service

- Program faults - Program does something illegal, e.g., access memory that it doesn’t have access to

- Interrupt events - External device needs the attention of OS, e.g., a network packet has arrived on the network card.

In all of the cases, the mechanism is the same as described above. The IDT has many entries. The system calls/interrupts store a number in a CPU register before calling trap to identify which IDT entry to use.

When the OS is done handling the syscall or interrupt, it calls a special instruction called return-from-trap. It undoes all the actions done by the trap instruction. It restores the context of CPU registers from the kernel stack. It changes the CPU privilege from kernel mode to user mode, and it restores the PC and jumps to user code after the trap call.

The user process is unaware that it was suspended, and it resumes execution as usual.

Before returning to the user mode, the OS checks if it has to switch back to the same process or another process. Why do we want to do this? Sometimes when the OS is in kernel mode, it cannot return back to the same process it left. For example, when the original process has exited, it must be terminated (e.g., due to a segfault) or when the process has made a blocking system call. Sometimes, the OS does not want to return back to the same process. Maybe the process has run for a long time, Due to the timesharing responsibility, the OS switches to another process. In such cases, OS is said to perform a context switch to switch from one process to another.

OS scheduler

The OS scheduler is responsible for the context switching mechanism. It has two parts - A policy to pick which process to run and a mechanism to switch to that process. There are two different types of schedulers.

A non-preemptive (cooperative) scheduler is polite. It switches only when a process is blocked or terminated. On the other hand, a preemptive (non-cooperative) schedulers can switch even when the process is ready to be continued. The CPU generates a periodic timer interrupt to check if a process has run for too long. After servicing an interrupt, a preemptive scheduler switches to another process.

Mechanism of context switch

Suppose a process A has moved from the user to kernel mode, and the OS decides it must switch from A to B. Now, the OS’s first job is saving the context (PC, registers, kernel stack pointer) of A on the kernel stack. Then, the kernel stack pointer is switched to B, and B’s context is restored from B’s kernel stack. This context was saved by the OS when it switched out of B in the past. Now, the CPU is running B in kernel mode, return-from-trap to switch to user mode of B.

A subtlety on saving context

The context (PC and other registers) of a process is saved on the kernel stack in two different scenarios.

When the OS goes from user mode to kernel mode, user context (e.g., which instruction of user code you stopped at) is saved on the kernel stack by the trap instruction. This is later restored using the return-from-trap instruction. The other scenario where you store the context is during a context switch. The kernel context (e.g., where you stopped in the OS code) of process A is saved on the kernel stack of A by the context-switching code, and B’s context is restored.

Live Session 2

-

As the shell user, you don’t have to call

fork,exec,waitandexit. The shell automatically takes care of this. -

The stack pointer’s current location is stored in a general-purpose register before jumping from the user stack to the kernel stack.

-

Why don’t we create an empty system image for a child process? Some instructions (in Windows) require the child to run the parent’s code. We can’t initialize an empty image. There are some advantages to copying the memory image of the parent into the child. The modern OSs utilize copy on demand.

When a child is created, the PC points to the instruction after

fork(). This prevents the OS from callingfork()recursively.fork()can return \(-1\) instead of the child’s PID if the process creation fails. -

wait()reaps only a single child process. We need to call it again if we need to reap more children. With some variants ofwait(), we can delete a specific child.wait()is designed this way so that the parent knows which child has been reaped. This information is important for later instructions. Returning an array of variable size is not a feasible option.

Lecture 24 - Trap Handling

What is a trap? In certain scenarios, the user programs have to trap into the OS/kernel code. The following events cause a user process to “trap” into the kernel (xv6 refers to these events as traps)

- System calls - requests by user for OS services

- Interrupts - External device wants attention

- Program fault - Illegal action by a program

When any of the above events happen, the CPU executes the special int instruction. The user program is blocked. A trap instruction has a parameter int n, indicating the type of interrupt. For example, syscall has a different value for \(n\) from a keyboard interrupt.

Before the trap instruction is executed, eip points to the user program instruction, and esp to user stack. When an interrupt occurs, the CPU executes the following step s as part of the int n instruction -

- Fetch the \(n\)th entry interrupt descriptor table (CPU knows the memory address of IDT)

- Save stack pointer (

esp) to an internal register - Switch

espto the kernel stack of the process (CPU knows the location of the kernel stack of the current process - task state segment) - On the kernel stack, save the old

esp,eip, etc. - Load the new

eipfrom the IDT, which points to the kernel trap handler.

Now, the OS is ready to run the kernel trap handler code on the process’s kernel stack. Few details have been omitted above -

- Stack, code segments (

cs,ss), and a few other registers are also saved. - Permission check of CPU privilege levels in IDT entries. For example, a user code can invoke the IDT entry of a system call but not of a disk interrupt.

- Suppose an interrupt occurs when the CPU is already handling a previous interrupt. In that case, we don’t have to save the stack pointer again.

Why a separate trap instruction?

Why can’t we simply jump to kernel code, like we jump to the code of a function in a function call? The reasons are as follows -

- The CPU is executing the user code at a lower privilege level, but the OS code must run at a higher privilege.

- The user program cannot be trusted to invoke kernel code on its own correctly.

- Someone needs to change the CPU privilege level and give control to the kernel code.

- Someone also needs to switch to the secure kernel stack so that the kernel can start saving the state.

Trap frame on the kernel stack

Trap frame refers to the state pushed on the kernel stack during trap handling. This state includes the CPU context of where execution stopped and some extra information needed by the trap handler. The int n instruction pushes only the bottom few entries of the trap frame. The kernel code pushes the rest.

Kernel trap handler (alltraps)

The IDT entries for all interrupts will set eip to point to the kernel trap handler alltraps. The alltraps assembly code pushes the remaining registers to complete the trap frame on the kernel stack. pushal pushes all the general-purpose registers. It also invokes the C trap handling function named trap. The top of the trap frame (current top of the stack - esp) is given as an argument to the C function.

The convention of calling C functions is to push the arguments on to the stack and then call the function.

C trap handler function

The C trap handler performs different actions based on the kind of trap. For example, say we have to execute a system call. The function invokes int n. The system call number is taken from the register eax (whether fork, exec, etc.). The return value of the syscall is stored in eax after execution.

Suppose we have an interrupt from a device; the corresponding device-related code is called. The trap number is different for different devices. A timer interrupt is a special hardware interrupt, and it is generated periodically to trap to the kernel. On a timer interrupt, a process yields CPU to the scheduler. This interrupt ensures a process does not run for too long.

Return from trap

The values from the kernel stack have to be popped. The return from trap instruction iret does the opposite of int. It pops the values and changes the privilege level back to a lower level. Then, the execution of the pre-trap code can resume.

Summary of xv6 trap handling

- System calls, program faults, or hardware interrupts cause the CPU to run

int ninstruction and “trap” to the OS. - The trap instruction causes the CPU to switch

espto the kernel stack,eipto the kernel trap handling code. - The pre-trap CPU state is saved on the kernel stack in the trap frame. This is done both by the

intinstruction and thealltrapscode. - The kernel trap handler handles the trap and returns to the pre-trap process.

Lecture 25 - Context Switching

Before we understand context switching, we need to understand the concepts related to processes and schedulers in xv6. In xv6, every CPU has a attribute called a scheduler thread. It is a special process that runs the scheduler code. The scheduler goes over the list of processes and switches to one of the runnable processes. after running for sometime, the process switches back to the scheduler thread. This can happen in the following 3 ways -

- Process has terminated

- Process needs to sleep

- Process yields after running for a long time

A context switch only happens when the process is already in the kernel mode.

Scheduler and sched

The scheduler switches to a user process in the scheduler function. User processes switch to the scheduler thread in the sched function (invoked from exit, sleep, yield).

void

scheduler(void)

{

struct proc *p;

struct cpu *c = mycpu();

c−>proc = 0;

for(;;){

// Enable interrupts on this processor.

sti();

// Loop over process table looking for process to run.

acquire(&ptable.lock);

for(p = ptable.proc; p < &ptable.proc[NPROC]; p++){

if(p−>state != RUNNABLE)

continue;

// Switch to chosen process. It is the process’s job

// to release ptable.lock and then reacquire it

// before jumping back to us.

c−>proc = p;

switchuvm(p);

p−>state = RUNNING;

swtch(&(c−>scheduler), p−>context);

switchkvm();

// Process is done running for now.

// It should have changed its p−>state before coming back.

c−>proc = 0;

}

release(&ptable.lock);

}

}

The sched function is as follows

void

sched(void)

{

int intena;

struct proc *p = myproc();

if(!holding(&ptable.lock))

panic("sched ptable.lock");

if(mycpu()−>ncli != 1)

panic("sched locks");

if(p−>state == RUNNING)

panic("sched running");

if(readeflags()&FL_IF)

panic("sched interruptible");

intena = mycpu()−>intena;

swtch(&p−>context, mycpu()−>scheduler);

mycpu()−>intena = intena;

}

The high level view is

When does a process call sched?

Yield - A timer interrupt occurs when a process has run for a long enough time.

Exit - The process has exit and set itself as zombie.

Sleep - A process has performed a blocking action and set itself to sleep.

struct context

This structure is saved and restored during a context switch. It is basically a set of registers to be saved when switching from one process to another. For example, we must save eip which signifies where the process has stopped. The context is pushed onto the kernel stack and the struct proc maintains a pointer to the context structure on the stack.

Now, the obvious question is “what is the difference between this and the trap frame?” We shall look into it now.

Context structure vs. Trap frame

The trapframe (p -> tf) is saved when the CPU switches to the kernel mode. For example, eip in the trapframe is the eip value where the syscall was made in the user code. On the other hand, the context structure is saved when process switches to another process. For example, eip value when swtch is called. Both these structures reside on the kernel stack and struct proc has pointers to both of them. Although, they differ in the content they store. This sort of clears up the confusion in the subtlety of memory storage in the kernel stack.

swtch function

This function is invoked both by the CPU thread and the process. It takes two arguments, the address of the pointer of the old context and the pointer of the new context. We are not sending the address of the new context, but the context pointer itself.

// When invoked from the scheduler: address of scheduler's context pointer, process context pointer

swtch(&(c -> scheduler), p -> context);

// When invoked from sched: address of process context pointer, scheduler context pointer

swtch(&p -> context, mycpu() -> scheduler);

When a process/thread has invoked the swtch, the stack has caller save registers and the return address (eip). swtch does the following -

- Push the remaining (callee save) registers on the old kernel stack.

- Save the pointer to this context into the context structure pointer of the old process.

- Switch

espfrom the old kernel stack to the new kernel stack. -

espnow points to the saved context of new process. This is the primary step of a context switch. - Pop the callee-save registers from the new stack.

- Return from the function call by popping the return address after the callee save registers.

The assembly code of swtch is as follows -

# Context switch

void swtch(struct context **old, struct context *new);

Save the current registers on the stack, creating

a struct context, and save its address in *old.

Switch stacks to new and pop previously−saved registers.

.globl swtch

swtch:

movl 4(%esp), %eax

movl 8(%esp), %edx

# Save old callee−saved registers

pushl %ebp

pushl %ebx

pushl %esi

pushl %edi

# Switch stacks

# opposite order compared to MIPS

# movl src dst

movl %esp, (%eax)

movl %edx, %esp

# Load new callee−saved registers

popl %edi

popl %esi

popl %ebx

popl %ebp

ret

eax has the address of the pointer to the old context and edx has the pointer to the new context.

Why address of pointer?

Summary of context switching in xv6

The old process, say P1, goes into the kernel mode and gives up the CPU. The new process, say P2, is ready to run. P1 switches to the CPU scheduler thread. The scheduler thread finds P2 and switches to it. Then, P2 returns from trap to user mode. The process of switching from one process/thread to another involves the following steps. All the register states (CPU context) on the kernel stack of the old process are saved. The context structure pointer of the old process is updated to this saved context. Then, esp moves from the old kernel stack to the new kernel stack. Finally, the register states are restored in the new kernel stack to resume the new process.

Lecture 26 - User process creation

We know that the init process is created when xv6 boots up. The init process forks a shell process, and the shell is used to spawn any user process. The function allocproc is called during both init process creation and in fork system call.

allocproc

It iterates over the ptable, finds an unused entry, and marks it as an embryo. This entry is later marked as runnable after the process creation completes. It also allocates a new PID for the process. Then, allocproc has to allocate space on the kernel stack. To do this, we start from the bottom of the stack and find some free space. We leave room for the trap frame. Then, we push the return address of trapret and also the context structure with eip pointing to the function forkret. When the new process is scheduled, it begins execution at forkret, returns to trapret, and finally returns from the trap to the user space.

The role of allocproc is to create a template kernel stack, and make the process look like it had a trap and was context switched out in the past. This is done so that the scheduler can switch to this process like any other.

Where is kernel mode? The sp points to the kernel stack.

allocproc(void)

{

struct proc *p;

char *sp;

acquire(&ptable.lock);

for(p = ptable.proc; p < &ptable.proc[NPROC]; p++)

if(p−>state == UNUSED)

goto found;

release(&ptable.lock);

return 0;

found:

p−>state = EMBRYO;

p−>pid = nextpid++;

release(&ptable.lock);

// Allocate kernel stack.

if((p−>kstack = kalloc()) == 0){

p−>state = UNUSED;

return 0;

}

sp = p−>kstack + KSTACKSIZE;

// Leave room for trap frame.

sp −= sizeof *p−>tf;

p−>tf = (struct trapframe*)sp;

// Set up new context to start executing at forkret,

// which returns to trapret.

sp −= 4;

*(uint*)sp = (uint)trapret;

sp −= sizeof *p−>context;

p−>context = (struct context*)sp;

memset(p−>context, 0, sizeof *p−>context);

p−>context−>eip = (uint)forkret;

return p;

}

Init process creation

The userinit function is called to create the init process. This is also done using the allocproc function. The trapframe of init is modified, and the process is set to be runnable. The init program opens STDIN, STDOUT and STDERR files. These are inherited by all subsequent processes as child inherits parent’s files. It then forks a child, execs shell executable in the child, and waits for the child to die. It also reaps dead children.

Forking a new process

Fork allocates a new process via allocproc. The parent memory image and the file descriptors are copied. Take a look at the fork code while you’re reading this. The trapframe of the child is copied from that of the parent. This allows the child execution to resume from the next instruction after fork(). Only the return value in eax is changed so that the child returns its PID. The state of the new child is set to runnable, and the parent returns normally from the trap/system call.

Summary of new process creation

New processes are created by marking a new entry in the ptable as runnable after configuring the kernel stack, memory image etc of the new process. The kernel stack of the new process is made to look like that of a process that had been context switched out in the past.

Live Session 3

-

What is a scheduler thread? It is a kernel process that is a part of the OS. It always runs in kernel mode, and it is not a user -level process.

-

int nis a hardware instruction and is enabled by a hardware descriptor language. It is similar to, say, theaddinstruction. Why is it a hardware instruction? We don’t trust the software. As it is a hardware instruction, we can trust the CPU maker to bake the chip correctly. -

The

trapframehas the user context, and the context structure has the kernel context. Every context switch is preceded by a trap instruction. A context switch only happens in the kernel mode. Now, what if a user process does not go to the kernel mode ever? The timer interrupt will take care of this. It will prompt the process to go into trap mode if the process has run for too long. -

In the subtlety on saving context, the first point refers to storing the trapframe. The second point refers to storing the context structure.

-

forkretis a small function that a program has to execute after a process has been created. This mainly involves the locking mechanism that will be discussed later.forkis a wrapper ofallocproc -

schedis only called by the user processes in case ofsleep,exit, andyield.schedstores the context of the current context onto the kernel stack. Theschedulerfunction is called by the scheduler thread to switch to a new process. Both of these functions call theswtchfunction to switch the current process. Think of the CPU scheduler (scheduler thread) as an intermediate process that has to be executed when switching from process A to process B.Why do we need this intermediate process? Several operating systems make do without a scheduler thread. This is modular, and xv6 chose this methodology. The

schedulerfunction simply uses the Round Robin algorithm.

Lecture 5 - Scheduling Policy

What is a scheduling policy? It is a program that decides which program to run at a given time from the set of ready processes. The OS scheduler schedules the CPU requests (bursts) of processes. CPU burst refers to the CPU time used by a process in a continuous stretch. For example, if a process comes back after an I/O wait, it counts as a fresh CPU burst.

Our goal is to maximize CPU utilization, and minimize the turnaround time of a process. The turnaround time of a process refers to the time between the process’s arrival and its completion. The scheduling policy must also minimize the average response time, from process arrival to the first scheduling. We should also treat all processes fairly and minimize the overhead. The amortized cost of a context switch is high.

We shall discuss a few scheduling policies and their pros/cons below.

First-In-First-Out (FIFO)

Schedule the processes as an when they arrive in the CPU. The drawback of this method is the convoy effect. In this situation, a process takes high time to execute and effectively increases the turnaround time.

Shortest Job First (SJF)

This is provably optimal when all processes arrive together. Although, this is a non-preemptive policy. Short processes can still be stuck behind the long ones when the long process arrives first.

Shortest Time-to-Completion First

This is a preemptive version of SJF. This policy preempts the running task is the remaining time to execute the process is more than that of the new arrival. This method is also called the Shortest Remaining Time First (SRTF).

How do we know the running time/ time left of a process? We schedule processes in bursts! No, that’s wrong! See this

Round Robin (RR)

Every process executes for a fixed quantum slice. The slice is big enough to amortize the cost of a context switch. This policy is also preemptive! It has a good response time and is fair. Although, it may have high turnaround times.

Schedulers in Real systems

Real schedulers are more complex. For example, Linux uses a policy called Multi-Level Feedback Queue (MLFQ). You maintain a set of queues and prioritize them. A process is picked from the highest priority queue. Processes in the same priority level are scheduled using a policy like RR. The priority of a queue decays with its age.

Lecture 6 - Inter-Process Communication (IPC)

In general, two processes do not share any memory with each other. Some processes might want to work together for a task, and they need to communicate information. The OS provides IPC mechanisms for this purpose.

Types of IPC mechanisms

Shared Memory

Two processes can get access to the same region of the memory via shmget() system call.

int shmget(key_t key, int size, int shmflg)

By providing the same key, two processes can get the same segment of memory.

How do they know keys?

Although, when realizing this idea, we need to consider some problematic scenarios. For example, we need to take care that one process is not overwriting another’s data.

Signals

These are well-defined messages that can be sent to processes that are supported by the OS. Some signals have a fixed meaning. For example, a signal to terminate a process. Some signals can also be user-defined. A signal can be sent to a process by the OS or another process. For example, when you type Ctrl + C, the OS sends SIGINT signal to the running process.

These signals are handled by a signal handler. Every process has a default code to execute for each signal. Some (Can’t edit the terminate signal) of these signal handlers can be overridden to do other things. Although, you cannot send messages/bytes in this method.

Sockets

Sockets can be used for two processes on the same machine or different machines to communicate. For example, TCP/UDP sockets across machines and Unix sockets in a local machine. Processes open sockets and connect them to each other. Messages written into one socket can be read from another. The OS transfers the data across socket buffers.

Pipes

These are similar to sockets but are half-duplex - information can travel only in one direction. A pipe system call returns two file descriptors. These are simply handles that can read and write into files (read handle and write handle). The data written in one file descriptors can be read through another.

In regular pipes, both file descriptors are in the same process. How is this useful? When a parent forks a child process, the child process has access to the same pipe. Now, the parent can use one end of the pipe, and the child can use the other end.

Named pipes can be used to provide two endpoints a pipe to different processes. The pipe data is buffered in OS (kernel) buffers between write and read.

Message Queues

This is an abstraction of a mailbox. A process can open a mailbox at a specified location and send/receive messages from the mailbox. The OS buffers messages between send and receive.

Blocking vs. non-blocking communication

Some IPC actions can block

- reading from a socket/pip that has no data, or reading from an empty message queue

- Writing to a full socket/pip/message queue

The system calls to read/write have versions that block or can return with an error code in case of a failure.

Lecture 7 - Introduction to Virtual memory

The OS provides a virtualized view of the memory to a user process. Why do we need to do this? Because the real view of memory is messy! In the olden days, the memory had only the code of one running process and the OS code. However, the contemporary memory structure consists of multiple active processes that timeshare the CPU. The memory of a single process need not be contiguous.

The OS hides these messy details of the memory and provides a clean abstraction to the user.

The Abstraction: (Virtual) Address Space

Every process assumes it has access to a large space of memory from address - to a MAX value. The memory of a process, as we’ve seen earlier, has code (and static data), heap (dynamic allocations), and stack (function calls). Stack and heap of the program grow during runtime. The CPU issues “loads” and “stores” to these virtual addresses. For example, when you print the address of a variable in a program, you get the virtual addresses!

Translation of addresses

The OS performs the address translation from virtual addresses (VA) to physical addresses (PA) via a memory hardware called Memory Management Unit (MMU). The OS provides the necessary information to this unit. The CPU loads/stores to VA, but the memory hardware accesses PA.

Example: Paging

This is a technique used in all modern OS. The OS divides the virtual address space into fixed-size pages, and similarly, the physical memory is segmented into frames. To allocate memory, a page is mapped to a free physical frame. The page table stores the mappings from the virtual page number to the physical frame number for a process. The MMU has access to these page tables and uses them to translate VA to PA.

Goals of memory virtualization

- Transparency - The user programs should not be aware of the actual physical addresses.

- Efficiency - Minimize the overhead and wastage in terms of memory space and access time.

- Isolation and Protection - A user process should not be able to access anything outside its address space.

How can a user allocate memory?

The OS allocates a set of pages to the memory image of the process. Within this image

- Static/global variables are allocated in the executable.

- Local variables of a function are allocated during runtime on the stack.

- Dynamic allocation with

mallocon the heap.

Memory allocation is done via system calls under the hood. For example, malloc is implemented by a C library that has algorithms for efficient memory allocation and free space management.

When the program runs out of the initially allocated space, it grows the heap using the brk/sbrk system call. Unlike fork, exec, and wait, the programmer is discouraged from using these system calls directly in the user code.

A program can also allocate a page-sized memory using the mmap() system call and get an anonymous (empty, will be discussed later) page from the OS.

A subtlety in the address space of the OS

Where is the OS code run? OS is not a separate process with its own address space. Instead, the OS code is a part of the address space of every process. A process sees OS as a part of its code! In the background, the OS provides this abstraction. However, in reality, the page tables map the OS addresses to the OS code.

Also, the OS needs memory for its data structures. How does it allocate memory for itself? For large allocation, the OS allocates itself a page. For smaller allocations, the OS uses various memory allocation algorithms (will be discussed later). Note. The OS cannot use libc and malloc in the kernel.

Why?

Live Session 4

- The scheduler/PC does not always know the running time of the processes. Therefore, we can’t implement SJF and SRTF in practice.

- The shared key is shared offline (say, via a command-line argument) in the shared memory IPC.

- Every process has a set of virtual addresses that it can use.

mmap()is used to fetch the free virtual addresses. It is mainly used for allocating large chunks of memory (allocates pages). It can be used to get general memory and not specifically for heap. On the other hand,brkandsbrkgrow the heap in small chunks.mallocuses these two system calls for expanding memory. - Conceptually, sockets and message queues are the same. The two structures just have a different programming interface.

-

libcandmalloccan’t be used in the kernel because these are user-space libraries. The kernel has its own versions of these functions. Variants. - The C library grows the heap. The OS grows the stack. This is because the heap memory is an abstraction provided by the C libraries. The C library gets a page of memory using

mmap()and provides a small chunk of this page to the user whenmalloc()is called. Suppose the stack runs out of the allocated memory. In that case, the OS either allocates new memory and transfers all the content if required or terminates the process for using a lot of memory.

Lecture 8 - Mechanism of Address Translation

Suppose we have written a C program that initializes a variable and adds a constant to it. This code is converted into an assembly code, each instruction having an address. The virtual address space is set up by the OS during process creation.

Can we use heap from an assembly code?

The OS places the memory images in various chunks (need not be contiguous). However, we shall be considering a simplified version of an OS where the entire memory image is placed in a single chunk. We need the OS to access the physical memory given the virtual address. Also, the OS must detect an error if a program tries to access the memory that is outside the bounds.

Who performs address translation?

In this simple example, the OS can tell the hardware the base and the bound/size values. The MMU calculates PA from VA. The OS is not involved in every translation!

Basically, the CPU provides a privileged mode of execution. The instruction set has privileged instructions to set translation information (e.g., base, bound). We don’t want the user programs to be able to set this information. Then, the MMU uses this information to perform translation on every memory access. The MMU also generates faults and traps to OS when an access is illegal.

Role of OS

What does the OS do? The OS maintains a free list of memory. It allocates spaces to process during creation and cleans up when done. The OS also maintains information of where space is allocated to each process in PCBs. This information is provided to the hardware for translation. Also, the information has to be updated on context switch in the MMU. Finally, the OS handles traps due to illegal memory access.

Segmentation

The base and bound method is a very simple method to store the memory image. Segmentation is a generalized method to store each segment of the memory image separately. For example, the base/bound values of the heap, stack, etc., are stored in the MMU. However, segmentation is not popularly used. Instead, paging is used widely.

Segmentation is suitable for sparse address spaces.

Stack and heap grow in the physical address space?

Although, segmentation uses variable-sized allocation, which leads to external fragmentation - small holes in the memory left unused.

Lecture 9 - Paging

The memory image of a process is split into fixed-size chunks called pages. Each of these pages is mapped to a physical frame in the memory. This method avoids external fragmentation. Although, there might be internal fragmentation. This is because sometimes the process requires much less memory than the size of a page, but the OS allocates memory in fixed-size pages. However, internal fragmentation is a small problem.

Page Table

This is a data structure specific to a process that helps in VA-PA translation. This structure might be as simple as an array storing mappings from virtual page numbers (VPN) to physical frame numbers (PFN). This structure is stored as a part of the OS memory in PCB. The MMU has access to the page tables and uses them for address translation. The OS has to update the page table given to the MMU upon a context switch.

Page Table Entry (PTE)

The most straightforward page table is a linear page table, as discussed above. Each PTE contains PFN and a few other bits

- Valid bit - Is this page used by the process?

- Protection bits - Read/write permissions

- Present bit - Is this page in memory? (will be discussed later)

- Dirty bit - Has this page been modified?

- Accessed bit - Has this page been recently accessed?

Address translation in hardware

A virtual address can be separated into VPN and offset. The most significant bits of the VA are the VPN. The page table maps VPN to PFN. Then, PA is obtained from PFN and offset within a page. The MMU stores the (physical) address of the start of the page table, not all the entries. The MMU has to walk to the relevant PTE in the page table.

Suppose the CPU requests code/data at a virtual address. Now, the MMU has to access the physical memory to fetch code/data. As you can see, paging adds overhead to memory access. We can reduce this overhead by using a cache for VA-PA mappings. This way, we need not go to the page table for every instruction.

Translation Lookaside Buffer (TLB)

Ignore the name. Basically, it’s a cache of recent VA-PA mappings. To translate VA to PA, the MMU first looks up the TLB. If the TLB misses, the MMU has to walk the page table. TLB misses are expensive (in the case of multiple memory accesses). Therefore, a locality of reference helps to have a high hit rate. For example, a program may try to fetch the same data repeatedly in a loop.

Note. TLB entries become invalid on context switch and change of page tables.

Page table can change without context switch?

Also, this cache is not taken care of by the OS but by the architecture itself.

How are page tables stored in the memory?

A typical page table has 2^20 entries in a 32-bit architecture (32 bit VA) and 4KB pages

2^32 (4GB RAM)/ 2^12 (4KB pages)

If each PTE is 4 bytes, then page table is 4MB! How do we reduce the size of page tables? We can use large pages. Still, it’s a tradeoff.

How does the OS allocate memory for such large tables? The page table is itself split into smaller chunks! This is a multilevel page table.

Multilevel page tables

A page table is spread over many pages. An “outer” page table or page directory tracks the PFNs of the page table pages. If a page directory can’t fit in a single page, we may use more than 2 levels. For example, 64-bit architectures use up to 7 levels!

How is the address translation done in this case? The first few bits of the VA identify the outer page table entry. The next few bits are used to index into the next level of PTEs.

What about TLB misses? We need to perform multiple access to memory required to access all the levels of page tables. This is a lot of overhead!

Lecture 10 - Demand Paging

The main memory may not be enough to store all the page tables of active processes. In such cases, the OS uses a part of the disk (swap space) to store pages that are not in active use. Therefore, physical memory is allocated on demand, and this is called demand paging.

Page Fault

The present bit in the page table entry indicates if a page of a process resides in the main memory or not (swap). When translating from VA to PA, the MMU reads the present bit. If the page is present in the memory, the location is directly accessed. Otherwise, the MMU raises a trap to the OS - page fault. (No fault happened, actually).

The page fault traps OS and moves CPU to kernel mode like any other system call. Then, the OS fetches the disk address of the page and issues a “read” to the disk. How does the OS know the location of pages on the disk? It keeps track of disk addresses (say, in a page table). The OS context switches to another process, and the current process is blocked.

Suppose the CPU context switches from the MMU read from swap to another process. When it comes back to the process, the disk would have fetched the address from the swap. How does the MMU revert back to its previous state? The other process to which the CPU context switched will have used the MMU. -> See the below paragraph

Eventually, when the disk read completes, the OS updates the process’s page table and marks the process as ready. When the process is scheduled again, the OS restarts the instruction that caused the page fault.

Summary - Memory Access

The CPU issues a load to a VA for code/data. Before sending a request, the CPU checks its cache first. It goes to the main memory if the cache misses. Note. This is not the TLB cache.

After the control reaches the main memory, the MMU looks up the TLB for VA. If TLB is hit, the PA is obtained, and the code/data is returned to the CPU. Otherwise, the MMU accesses memory, walks the page table (maybe multiple levels), and obtains the entry.

- If the present bit is set in PTE, the memory is accessed. Think about this point carefully. The frame may be present in physical memory or the swap.

- The MMU raises a page fault if the present bit is not set but is valid access. The OS handles page fault and restarts the CPU load instruction

- In the case of invalid page access, trap to OS for illegal access.

Complications in page faults

What does the OS do when servicing a page fault if there is no free page to swap in the faulting page? The OS must swap out an existing page (if modified, i.e., dirty) and then swap in the faulting page. However, this is too much work! To avoid this, the OS may proactively swap out pages to keep the list of free pages. Pages are swapped out based on the page replacement policy.

Page Replacement policies

The optimal strategy would be to replace a page that is not needed for the longest time in the future. This is not practical. We can use the following policies -

FIFO Policy - Replace the page that was brought into the memory the earliest. However, this may be a popular page.

LRU/LFU - This is commonly used in practical OS. In this policy, we replace the page that was least recently (or frequently) used in the past.

Example - Page Replacement Policy

Suppose we can store only 3 frames in the physical memory, and there are 4 pages in the process. The set of accesses is also known as the reference string. Note that the initial few accesses are definitely missed, as the cache is empty - cold misses. The goal is to reduce the number of page faults, which leads to reading from the swap space and is slow.

Belady’s anomaly - The performance of the FIFO may get worse when the memory size increases.

The LRU works better as it makes use of the locality of references.

How is LRU implemented?

The OS is not involved in every memory access.

Why?

Therefore, the OS does not know which page is the LRU. There is some hardware help and some approximations which help the OS to find the LRU page. The MMU sets a bit in the PTE (accessed bit) when a page is accessed. The OS periodically looks at this bit to estimate pages that are active and inactive.

How often does the OS check this? Going through all pages also takes time. Are interrupts used?

The OS tries to find a page that does not have the access bit set to replace a page. It can also look for a page with the dirty bit not set to avoid swapping out to disk.

If the dirty bit is set, using that page would involve writing to disk. Why?

Lecture 11 - Memory Allocation Algorithms

Let us first discuss the problems with variable/dynamic size allocation. This is done from the C library - allocates one or more pages from the kernel via brk/sbrk mmap system calls. The user may ask for variable-sized chunks of memory and can arbitrarily free the used memory. The C library and the kernel (for its internal data structures) must take care of all this.

Variable sized allocation - Headers

Consider a simple implementation of malloc. An available chunk of memory is allocated on request. Every assigned piece has a header with info like chunk size, checksum/some magic number, etc. Why store size? We should know how much memory to free when free is called.

Free List

How is the free space managed? It is usually handled as a list. The library keeps track of the head of the list. The pointer to the next free chunk is embedded within the current head. Allocations happen from the head.

External Fragmentation

Suppose 3 allocations of size 100 bytes each happen. Then, the middle chunk pointed to by sptr is freed. How is the free list updated? It now has two non-contiguous elements. The free space may be scattered around due to fragmentation. Therefore, we cannot satisfy a request for 3800 bytes even though we have free space. This is the primary problem of variable allocation.

Note. The list is updated to account for the newly freed space. That is, the head is revised to point to sptr, and the list is updated accordingly. Don’t be under the false impression that we are missing out on free space.

Splitting and Coalescing

Suppose we have a bunch of adjacent free chunks. These chunks may not be adjacent in the list. If we had started out with a big free piece, we might end up with small tangled chunks. We need an algorithm that merged all the contiguous free fragments into a bigger free chunk. We must also be able to split the existing free pieces to satisfy the variable requests.

Buddy allocation for easy coalescing

Allocate memory only in sizes of power of 2. This way, 2 adjacent power-of-2 chunks can be merged to form a bigger power-of-2 chunk. That is, buddies can be combined to form bigger pieces.

Variable Size Allocation Strategies

First Fit - Allocate the first chunk that is sufficient

Best Fit - Allocate the free chunk that is closest in size to the request.

Worst Fit - Allocate the free chunk that is farthest in size. Sounds odd? It’s better sometimes as the remaining free space in the chunk is large and is more usable. For example, the best fit might allocate a 20-byte chunk for a malloc(15), and the worst might give a 100-byte chunk for the same call. Now, the 85-byte free space is more usable than the 5-byte free space.

Do we use this in the case of buddy allocation?

Fixed Size allocations

Fixed-size allocations are much simpler as they avoid fragmentation and various other problems. The kernel issues fix page-sized allocations. It maintains a free list of pages, and the pointer to the following free page is stored in the current free page itself. What about smaller allocations (e.g., PCB)? The kernel uses a slab allocator. It maintains object caches for each type of object. Within each cache, only fixed size allocation is done. Each cache is made up of one or more slabs. Within a page, we have fixed size allocations again.

Fixed size memory allocators can be used in user programs too. malloc is a generic memory allocator, but we can use other methods too.

Live Session 5

-

Dirty bit -The MMU sets this bit (unlike the present bit and valid bit, which are set by the OS) when the page is recently swapped into the memory. The OS needs this while evicting pages from the memory.

Basically, when you swap in a page frame from the disk to the main memory, you copy-paste the frame. When the page in the main memory is modified, it is not in sync with the page in the disk. At this point, the MMU sets the dirty bit.

-

A page can be in

- Swap

- Main Memory

- Unallocated

Now, note that all of the 4GB memory space need not be allocated.

When the address is not allocated at all, the valid bit is unset. Whenever a virtual address is accessed, and consequently, the memory is allocated (allocated in the main memory), the valid bit is set. This memory allocation is triggered by a page fault. Initially, the newly allocated memory has the present bit set (since we just allocated the memory, we will use it). A page is swapped into the disk when not in active use, and the present bit is unset.

-

Access bit is set whenever a page is accessed. The bit is unset periodically by the OS.

-

The device driver maintains a queue to process multiple reads/writes to the disk.

-

The OS code is mapped into the virtual address space of the processes. It has all the OS information, PCBs, etc. This way, there wouldn’t be much hassle during context switching. The page table of a process also has entries for the OS code.

The OS memory inside the process memory can increase. If it takes too much space, the modern OS has some techniques to prevent the OS space from encroaching the process’s memory space.

A PTE also has permission bits that prevent the user code from accessing the OS code. When the program has to access the OS code, the trap instruction switches us into a higher privilege level and moves us into the OS code.

After we enter the kernel mode, we still cannot modify the OS code in any way we want. This is because we enter the kernel mode using thoroughly defined system calls.

Remember, the

int ninstruction is run by the hardware. -

Every time the page table is updated, the TLB has to be updated too. This is done via special instructions.

-

The user code runs natively on the CPU. The OS asks the CPU to execute the instructions sequentially. Then, the OS is out of the picture. All the memory fetches are done via the CPU.

Now, you may think, when does the OS actually be involved in the memory access. The OS has to periodically check over the processes (maybe during access bit updates). Even when system calls are made, the OS has a play.

-

There is a hardware register where the address of the page table is stored. The MMU accesses the page table using this address.